How Anthropic Built a Powerful Multi-Agent AI System: A Developer’s Guide

Hey there, fellow code wranglers! Ever felt like your AI is trying to juggle a dozen tasks but ends up dropping the ball? Well, buckle up, because Anthropic’s Multi-Agent AI System is here to show you how to turn your AI into a well-oiled team of super-smart assistants. It’s like giving your AI its own squad of mini-AIs to tackle complex problems faster and better. Let’s dive into this tech adventure with a grin and a cup of coffee!

Key Points:

- Anthropic’s Multi-Agent AI System likely uses multiple AI agents working together to tackle complex tasks, offering faster and more accurate results than single-agent systems.

- It seems to rely on an Orchestrator-Worker Architecture, where a lead agent coordinates specialized subagents, enhancing efficiency for research tasks.

- Prompt Engineering appears critical: iterative refinements, including having agents improve their own prompts and tool descriptions, cut task completion times by 40%.

- The system excels at breadth-first queries: Anthropic reports it outperformed single-agent Claude by about 90% on complex research tasks, though it uses significantly more computational resources.

- Challenges like coordination complexity and high token usage suggest careful design is needed for reliability and cost-effectiveness.

What Is a Multi-Agent AI System?

A Multi-Agent AI System is like a team of AI assistants working together, each handling a specific part of a task. Unlike a single AI trying to do everything, these systems split the work, making them faster and better at complex problems like research. Anthropic’s system, built for their AI Claude, uses this approach to explore topics across web searches, databases, and custom tools.

Why It Matters for Developers

For developers, understanding Anthropic’s system offers a blueprint for building smarter AI applications. It shows how to coordinate multiple AIs, optimize their behavior, and handle real-world challenges. Whether you’re creating a research tool or a customer support bot, these insights can help you level up your projects.

How Did Anthropic Do It?

Anthropic likely designed their system with a lead agent that acts like a project manager, assigning tasks to subagents. These subagents work in parallel, using tools like web searches or memory modules to gather and process information. The system’s success seems tied to careful Prompt Engineering, robust evaluation methods, and strategies to ensure reliability in production.

What Can You Learn?

Developers can take away practical tips: start with simple prompts, test with small datasets, and monitor AI decisions closely. While powerful, Multi-Agent Systems come with trade-offs like higher costs, so they’re best for high-value tasks. Anthropic’s approach suggests a balance of innovation and pragmatism.

Why Multi-Agent AI Systems Are Your New Best Friend

Imagine you’re trying to research the best programming languages for a new project. A single AI might slog through web pages, forums, and docs one by one, taking forever to give you an answer. Now picture a Multi-Agent AI System—it’s like having a team of researchers where one AI hunts for Python info, another digs into Java, and a third compares them, all at the same time. That’s the magic Anthropic has brewed with their AI Research System.

These systems shine because they:

- Work in Parallel: Multiple agents tackle different parts of a task simultaneously, slashing research time.

- Specialize: Each agent can focus on a specific skill, like searching or fact-checking, making the whole system smarter.

- Boost Accuracy: By cross-verifying results, agents reduce errors and deliver more reliable answers.

For developers, this is a game-changer. Whether you’re building a chatbot, a data analysis tool, or a research app, Multi-Agent Systems can make your AI faster, more versatile, and downright impressive. As Anthropic’s engineering team shared in their blog post, their system outperforms single-agent Claude by 90% on complex research tasks. That’s not just a win—it’s a knockout!

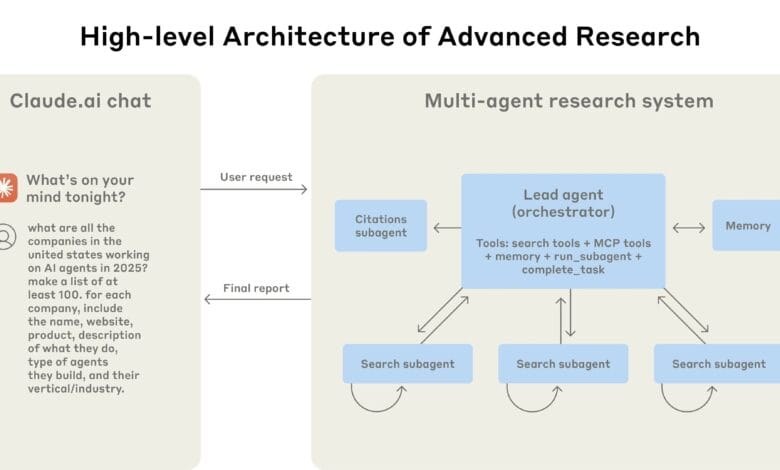

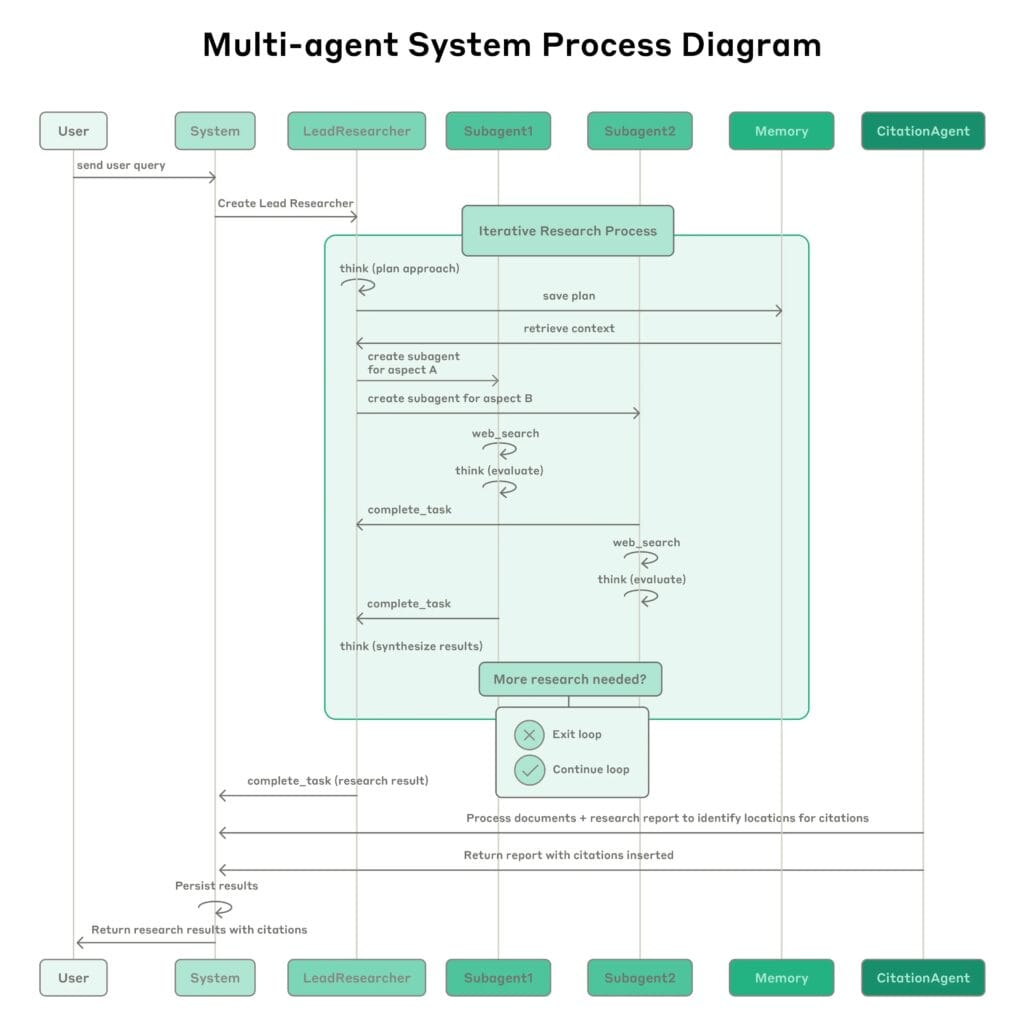

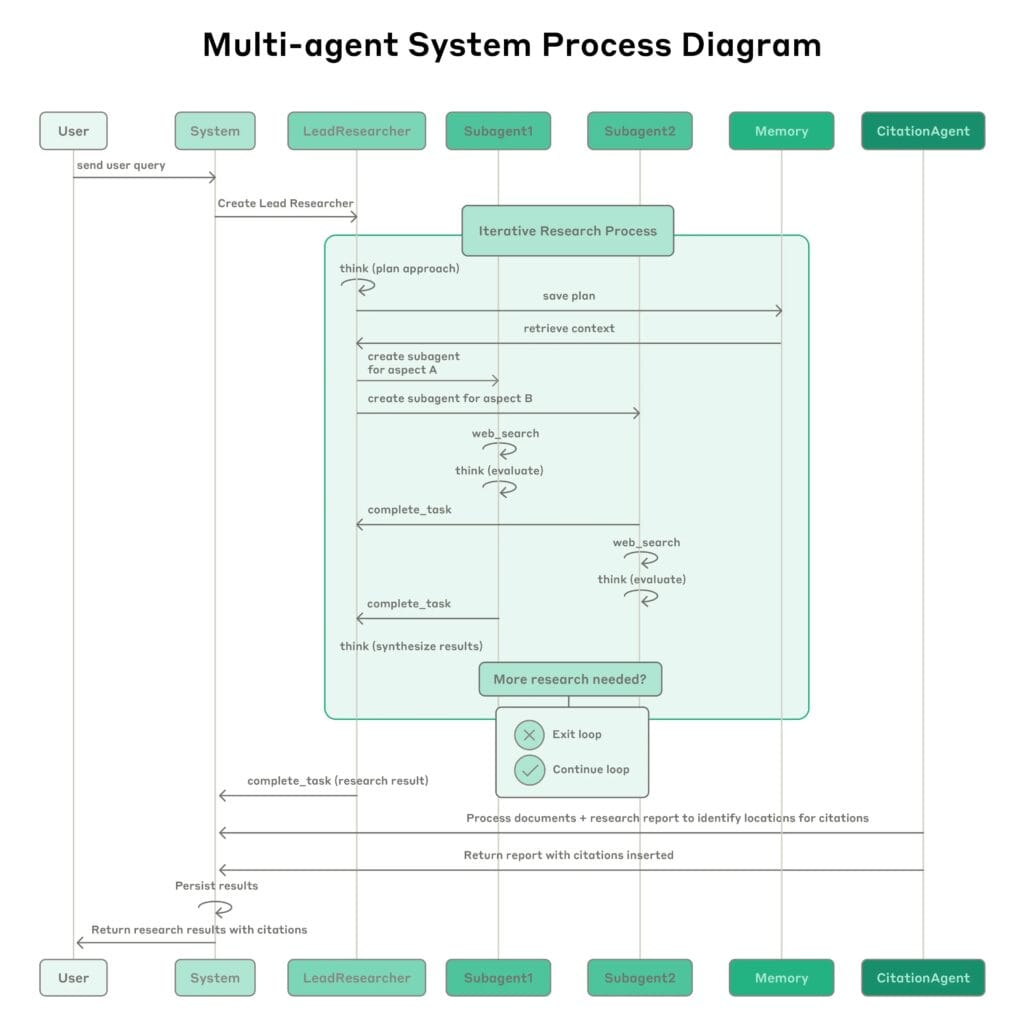

The Nuts and Bolts: Anthropic’s Orchestrator-Worker Architecture

At the heart of Anthropic’s Multi-Agent AI System is the Orchestrator-Worker Architecture. Think of it as a tech startup where a project manager (the orchestrator) assigns tasks to a team of specialists (the workers). Here’s how it works:

- Lead Agent (Orchestrator): This is the brainy boss. It takes your query, breaks it into bite-sized tasks, and hands them out to subagents. For example, if you ask, “What’s the best way to optimize a database?” the lead agent might split it into “research indexing techniques,” “explore caching,” and “find real-world examples.”

- Subagents (Workers): These are the doers. Each subagent gets a task and uses tools like web searches or database queries to get the job done. They work in parallel, so you’re not waiting for one to finish before the next starts.

- Parallel Tool Calling: The system can fire up 3-5 subagents at once, each using multiple tools. This cuts research time by up to 90%, according to Anthropic’s technical blueprint.

But it’s not just about speed. Anthropic added some clever tricks like extended thinking mode, where the lead agent plans carefully before acting, and interleaved thinking, where an agent pauses after each tool result to judge what it found and adjust its next step. It’s like your AI is not just following a recipe but tasting the dish as it cooks.
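To make the fan-out idea concrete, here is a minimal sketch of the orchestrator-worker pattern using Python’s asyncio. It is not Anthropic’s implementation: `plan_subtasks` and `run_subagent` are hypothetical stand-ins for LLM calls, and the subtasks are hard-coded from the database example above.

```python
import asyncio


def plan_subtasks(query: str) -> list[str]:
    """Hypothetical planner: a real lead agent would ask an LLM to decompose."""
    return [
        f"{query} :: research indexing techniques",
        f"{query} :: explore caching",
        f"{query} :: find real-world examples",
    ]


async def run_subagent(task: str) -> str:
    """Hypothetical worker: a real one would call a model and tools here."""
    await asyncio.sleep(0.01)  # stands in for search and model latency
    return f"findings for: {task}"


async def orchestrate(query: str) -> str:
    subtasks = plan_subtasks(query)
    # Fan out: all subagents run concurrently instead of one after another.
    results = await asyncio.gather(*(run_subagent(t) for t in subtasks))
    # Fan in: the lead agent merges the workers' output into one answer.
    return "\n".join(results)


if __name__ == "__main__":
    print(asyncio.run(orchestrate("optimize a database")))
```

Because `asyncio.gather` returns results in the order the tasks were submitted, the lead agent can match each finding back to its subtask without extra bookkeeping.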


Key Components of the System

Let’s pop the hood and check out the main parts of Anthropic’s Multi-Agent AI System:

| Component | Description |
|---|---|
| Lead Agent | Coordinates tasks, analyzes queries, and delegates to subagents. |
| Subagents | Specialized AIs that handle specific tasks like searching or data analysis. |
| Tools | Includes web_search, web_fetch, create_subagent, memory, and think. |
| Memory Module | Persists context across tasks, keeping the system coherent over long sessions. |
| CitationAgent | Ensures all sources are properly credited, adding trust to the output. |

These components work together like a well-rehearsed band, with the lead agent as the conductor and subagents as the musicians, each playing their part to create a symphony of answers.

The Bumps in the Road: Challenges Anthropic Faced

Building a Multi-Agent AI System isn’t all sunshine and rainbows. Anthropic hit some serious roadblocks, but their solutions are pure gold for developers. Here’s what they faced:

- Coordination Chaos: More agents mean more complexity. Early versions spawned 50 subagents for simple queries, like a party where everyone’s talking at once. Anthropic streamlined this with better Prompt Engineering to teach agents when to delegate and when to chill.

- Error Pile-Up: Stateful agents (ones that remember past actions) can let errors snowball. Imagine an agent misreading a webpage and passing that mistake along. Anthropic added retries and checkpoints to catch these oopsies.

- Token Hunger: Multi-Agent Systems guzzle tokens, about 15 times more than a standard chat. That’s like your AI ordering a triple espresso when a single shot would do. They optimized prompts and tools to keep costs manageable.

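- Debugging Nightmares: Agents are non-deterministic, so the same prompt can play out differently from one run to the next. A report like “the AI missed obvious info” is hard to reproduce, and chasing it feels like herding cats. Anthropic built robust monitoring to trace every decision, making debugging less of a wild goose chase.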

These challenges highlight why Multi-Agent Systems need careful design. As Anthropic learned, it’s not about throwing more AIs at a problem—it’s about making them work smarter.
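The retries-and-checkpoints idea can be sketched in a few lines. This is an illustration under assumptions, not Anthropic’s code: `run_with_checkpoints`, the JSON checkpoint file, and `flaky_search` are all hypothetical names, and a real system would checkpoint much richer agent state.

```python
import json
import tempfile
import time
from pathlib import Path


def run_with_checkpoints(steps, checkpoint: Path, max_retries: int = 3):
    """Run named steps in order, saving results to disk after each one.

    On a restart, steps already in the checkpoint are skipped.
    """
    done = json.loads(checkpoint.read_text()) if checkpoint.exists() else {}
    for name, fn in steps:
        if name in done:
            continue  # finished in an earlier run
        for attempt in range(1, max_retries + 1):
            try:
                done[name] = fn()
                break
            except Exception:
                if attempt == max_retries:
                    raise  # give up; earlier progress stays on disk
                time.sleep(0.01 * 2 ** attempt)  # short exponential backoff
        checkpoint.write_text(json.dumps(done))
    return done


calls = {"n": 0}


def flaky_search() -> str:
    """Simulated tool that fails on its first call only."""
    calls["n"] += 1
    if calls["n"] == 1:
        raise TimeoutError("simulated tool failure")
    return "search results"


if __name__ == "__main__":
    path = Path(tempfile.mkdtemp()) / "agent_state.json"
    steps = [("search", flaky_search), ("summarize", lambda: "summary")]
    print(run_with_checkpoints(steps, path))
```

The point of the design is that a failure late in a long run costs one retry, not a restart from scratch.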

Lessons Learned: Your Cheat Sheet for Building Multi-Agent Systems

Anthropic’s journey from prototype to production is packed with wisdom. Here’s what they learned, served up Blurbify-style for easy digestion:

- Prompt Engineering Isn’t Dead: It’s the secret sauce. Anthropic refined prompts to teach agents how to delegate, scale effort to query complexity, and even improve their own prompts, cutting task times by 40%. Think of it as giving your AI a playbook for winning.

- Start Small, Dream Big: Test with just 20 queries to iron out kinks before scaling up. It’s like prototyping an app before launching it to millions.

- Let AI Judge AI: Use an LLM-as-judge to score outputs for accuracy and quality. It’s faster than human reviews but still needs a human touch for tricky cases.

- Keep It Reliable: Use rainbow deployments (gradually shifting traffic from the old version to the new one while both keep running, so in-flight agents aren’t disrupted) and monitor every decision to catch bugs. It’s like having a dashboard for your AI’s brain.

- Pick Your Battles: Multi-Agent Systems rock for breadth-first queries (like researching a topic from multiple angles) but are a weaker fit for tasks like coding, where fewer pieces can run in parallel and coordination is tricky.

These lessons are your roadmap to building a Multi-Agent AI System that doesn’t just work but wows.
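Here is a rough sketch of the “start small” and “let AI judge AI” lessons working together: a tiny evaluation loop that scores each answer and flags weak ones for a human. Everything here is an assumption for illustration, including the rubric criteria, the 0.7 threshold, and `stub_judge`, which stands in for a real LLM call.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable

# Assumed rubric criteria; pick whatever matters for your use case.
RUBRIC = ("factual_accuracy", "completeness", "source_quality")


@dataclass
class Verdict:
    query: str
    scores: dict[str, float]  # each score is between 0.0 and 1.0

    @property
    def overall(self) -> float:
        return mean(self.scores.values())


def evaluate(cases, answer_fn: Callable[[str], str], judge_fn, threshold=0.7):
    """Score each answer with the judge and flag low scorers for human review."""
    verdicts = [Verdict(q, judge_fn(q, answer_fn(q), RUBRIC)) for q in cases]
    needs_human = [v.query for v in verdicts if v.overall < threshold]
    return verdicts, needs_human


def stub_judge(query: str, answer: str, rubric) -> dict[str, float]:
    """Placeholder for an LLM judge: simply scores longer answers higher."""
    score = min(len(answer) / 40, 1.0)
    return {criterion: score for criterion in rubric}


if __name__ == "__main__":
    answers = {
        "q1": "A detailed, well sourced answer about indexing.",
        "q2": "Unsure.",
    }
    verdicts, review = evaluate(list(answers), answers.get, stub_judge)
    print("needs human review:", review)
```

Swapping `stub_judge` for a function that prompts a model with the rubric keeps the rest of the loop unchanged, which makes it easy to grow from 20 queries to a larger set later.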

Real-World Applications: Where Multi-Agent Systems Shine

Anthropic’s system is built for research, but the Orchestrator-Worker Architecture can flex its muscles in tons of other areas. Here are some ideas to spark your creativity:

- Customer Support: Imagine a lead agent fielding a customer query and spawning subagents to check product specs, review order history, and draft a response. Faster replies, happier customers.

- Data Analysis: In finance, a lead agent could assign subagents to pull market data, run statistical models, and summarize trends, all in parallel.

- Content Creation: A writing AI could use subagents to research topics, fact-check claims, and suggest edits, turning a rough draft into a polished piece.

Let’s paint a picture with a simple example. Say you ask, “What’s the difference between Python and Java?” The lead agent might:

- Task Subagent 1: Search for Python’s key features.

- Task Subagent 2: Search for Java’s key features.

- Task Subagent 3: Compare the results and summarize.

In minutes, you get a clear, concise answer, not a novel-length ramble. That’s the power of Claude AI Agents in action.
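The Python-versus-Java plan above has one wrinkle: the comparison step can only start once both searches finish. A minimal sketch of that staging, assuming a hypothetical `Task` type and a `run_task` stub in place of real subagent calls, might look like this:

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    prompt: str
    depends_on: list[str] = field(default_factory=list)


def run_task(task: Task, inputs: dict[str, str]) -> str:
    """Hypothetical subagent call; a real one would query a model with tools."""
    context = " + ".join(inputs[d] for d in task.depends_on)
    return f"[{task.name}] {task.prompt}" + (f" using ({context})" if context else "")


def execute(plan: list[Task]) -> dict[str, str]:
    results: dict[str, str] = {}
    pending = list(plan)
    with ThreadPoolExecutor() as pool:
        while pending:
            # Every task whose dependencies are finished runs in this wave.
            ready = [t for t in pending if all(d in results for d in t.depends_on)]
            if not ready:
                raise ValueError("plan has a cycle or a missing dependency")
            outputs = list(pool.map(lambda t: run_task(t, results), ready))
            results.update({t.name: out for t, out in zip(ready, outputs)})
            pending = [t for t in pending if t.name not in results]
    return results


PLAN = [
    Task("python", "Search for Python's key features"),
    Task("java", "Search for Java's key features"),
    Task("compare", "Compare and summarize", depends_on=["python", "java"]),
]

if __name__ == "__main__":
    for name, output in execute(PLAN).items():
        print(name, "->", output)
```

The two searches run together in the first wave, and the comparison runs alone in the second with both results as input.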

Tips for Building Your Own Multi-Agent System

Ready to roll up your sleeves? Here’s how to get started:

- Learn the Architecture: Study Anthropic’s Orchestrator-Worker Architecture to understand how to coordinate agents. Their API docs are a great resource.

- Master Prompt Engineering: Write clear, specific prompts that guide agents on what to do and how to do it. Experiment and iterate to find what works.

- Test Early, Test Often: Start with a small set of queries and use both AI and human evaluations to refine your system.

- Monitor Like a Hawk: Log every decision to catch bugs and optimize performance. Structured logs and traces that tie each decision back to the original query make this far easier.

- Choose the Right Tools: Pick tools that match your use case, like web searches for research or database queries for data analysis.

For inspiration, check out tutorials like this Medium post on building agents with Claude’s Model Context Protocol (MCP).
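As a starting point for the “log every decision” tip, here is a small sketch built on Python’s standard logging module. The record fields (`trace_id`, `agent`, `action`) and the `log_decision` helper are assumptions for illustration, not part of any Anthropic tooling.

```python
import json
import logging
import time
import uuid

logger = logging.getLogger("agent.trace")


def log_decision(trace_id: str, agent: str, action: str, **details) -> dict:
    """Emit one structured JSON record per agent decision."""
    record = {
        "trace_id": trace_id,  # ties all records for one user query together
        "agent": agent,
        "action": action,
        "ts": time.time(),
        **details,
    }
    logger.info(json.dumps(record))
    return record


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    trace = uuid.uuid4().hex
    log_decision(trace, "lead", "delegate", subtasks=2)
    log_decision(trace, "subagent-1", "tool_call", tool="web_search", tokens=512)
```

Filtering the output by `trace_id` reconstructs the full decision path for one query, which is exactly what you want when a run goes sideways.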

Wrapping It Up: Your Next Steps

Anthropic’s Multi-Agent AI System is like a superhero team for AI—each agent brings its own powers, and together, they save the day on complex tasks. By leveraging the Orchestrator-Worker Architecture, nailing Prompt Engineering, and tackling challenges head-on, Anthropic has shown us how to build AI that’s not just smart but unstoppable.

For developers and tech enthusiasts, this is your call to action. Dive into Multi-Agent Systems, experiment with Anthropic’s tools, and build something amazing. Sure, there’ll be some debugging headaches, but as Anthropic’s journey proves, the payoff is worth it. So, what are you waiting for? Assemble your AI squad and start coding!

FAQ: Your Burning Questions Answered

- What’s the difference between a single-agent and a multi-agent system?

A single-agent system is one AI doing everything step-by-step, like a solo chef cooking a meal. A Multi-Agent System is a team of AIs, each handling a part of the task in parallel, like a kitchen crew. This teamwork makes complex tasks faster and more accurate.

- How can I start building my own multi-agent system?

Begin with the Orchestrator-Worker Architecture. Use a platform like Anthropic’s API to create a lead agent and subagents. Start small, test with simple queries, and refine your prompts. Check out Anthropic’s API guide for a head start.

- What are the main challenges in deploying multi-agent systems?

You’ll face coordination complexity, error handling, high token costs, and debugging non-deterministic behaviors. Anthropic tackled these with smart Prompt Engineering, retries, and robust monitoring.

- How does Anthropic’s system compare to other multi-agent approaches?

Anthropic’s system focuses on research with parallel tool calling and dynamic planning. Other systems might use decentralized agents or target different tasks, but Anthropic’s emphasis on reliability and evaluation sets it apart.

- Can multi-agent systems be used for tasks other than research?

Totally! From customer support to data analysis to content creation, Multi-Agent Systems can shine anywhere tasks can be split into parallel chunks. The key is designing the right architecture for your use case.

Sources We Trust:

A few solid reads we leaned on while writing this piece.

- How we built our multi-agent research system

- Anthropic shares blueprint for Claude Research agent using multiple AI agents in parallel

- What the makers of Claude AI say about Building Agents

- New capabilities for building agents on the Anthropic API

- Anthropic’s Claude Agents —Simple demo of building powerful AI multi-agents