What’s This MCP Thing Everyone Might Start Talking About?

Hey there, fellow code wranglers and AI enthusiasts! Have you ever found yourself in a coding pickle, trying to get your shiny new AI model—like ChatGPT or Claude—to actually use your specific data? You know, those moments when you’re like, “Hey, AI buddy, can you just look at this file on my server?” or “Can you analyze these 1000 code files from GitHub?” And then you end up spending hours (or days) building custom integrations, fiddling with APIs, or setting up file transfers just to make it work? Yeah, that’s the kind of headache we’re talking about today.

Well, there’s this relatively new concept popping up called the Model Context Protocol (MCP), and it’s aiming to fix that whole messy process. Think of it as the universal translator for AI and data—finally, a standard way for them to chat without you having to learn Klingon or whatever. But what exactly is MCP, and why should you, as a developer, care? Let’s dive in.

Key Takeaways

- MCP is an open standard for connecting AI assistants to data sources.

- It simplifies integration, reducing the need for custom coding.

- It uses a client-server architecture with MCP Hosts and Servers.

- Benefits include scalability, security, and flexibility.

- Developers can build MCP Servers for various data sources.

- Practical examples include integrating with GitHub and Notion.

- While powerful, MCP still requires understanding of underlying technologies for troubleshooting.

- The future looks bright for MCP as it gains adoption in the AI community.

Why It Matters

For developers, MCP could mean less time spent on repetitive integration tasks and more time building cool features. It’s designed to work with different AI models and data sources, making it flexible and scalable.

What to Expect

While MCP is promising, it’s still new, so you might run into some setup hiccups. However, with support from companies like Anthropic and an open-source community, it’s likely to become a go-to solution for AI integrations.

Why MCP is a Developer’s Secret Weapon

Let’s start with the basics: why do we even need something like MCP? Right now, integrating AI models with your data sources is like trying to connect a bunch of mismatched cables. You’ve got your AI model over here, your database or GitHub repo over there, and you’re stuck in the middle trying to figure out how to make them talk to each other. Every time you want your AI to do something useful—like analyze your code, pull data from your emails, or even just read a file—you often have to build a custom bridge. And let’s be real, that’s not fun. It’s repetitive, time-consuming, and honestly, kind of annoying.

MCP comes in like a hero in a cape, offering a standardized protocol that lets AI assistants connect seamlessly to various data sources—think content repositories, business tools, or development environments. Instead of building a custom connector for every single thing, MCP gives you a standardized way to plug your AI into your data. It’s like having a universal adapter for all your devices—no more fumbling with different plugs.

But why should you care? Because MCP isn’t just about making life easier for AI models; it’s about freeing up your time as a developer. Instead of spending hours on integrations, you can focus on building cool stuff—like that next-gen app or that killer feature your boss keeps asking for. Plus, it’s scalable. As you add more data sources, MCP grows with you, without you having to reinvent the wheel every time.

How Does MCP Work? (Let’s Get Technical, But Not Too Technical)

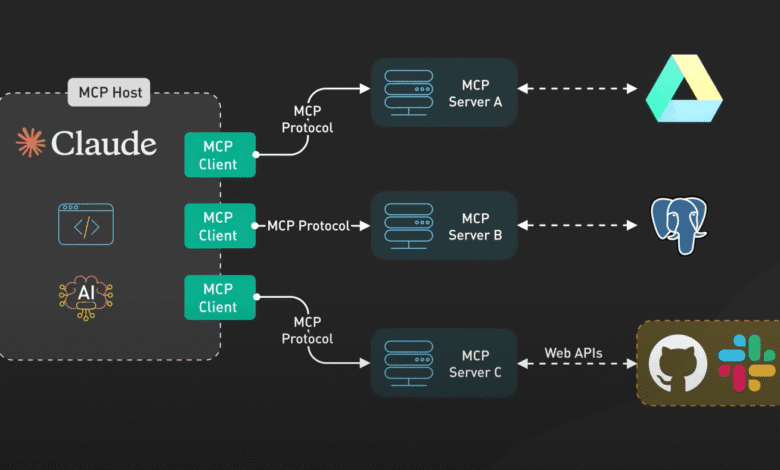

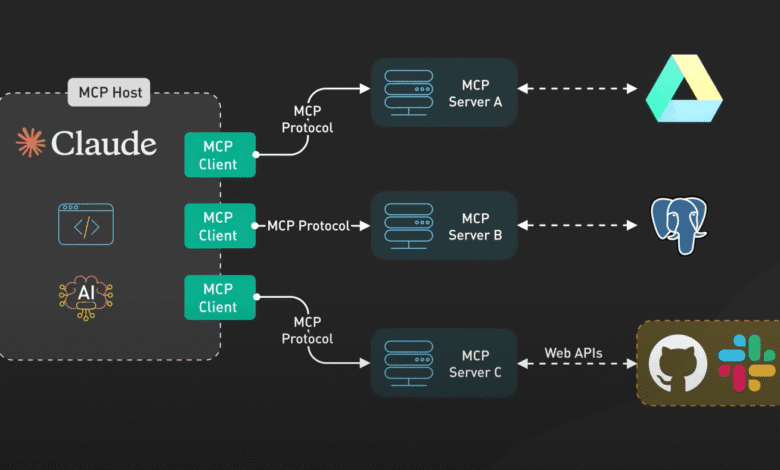

Alright, let’s peek under the hood. MCP uses a client-server architecture, which sounds fancy but is actually pretty straightforward. Here’s how it breaks down:

- MCP Host: This is where your AI lives. Think of it as the brain of the operation—Claude Desktop, a future version of ChatGPT, or even your custom AI tool. The Host has an MCP Client built into it.

- MCP Server: This is what sits with your data source. It’s like a little bridge that knows how to talk to your data—whether it’s GitHub, Gmail, or your company’s internal database. Each data source can have its own MCP Server.

The magic happens when the MCP Client (in the Host) talks to the MCP Server (with the data source) using the standardized MCP protocol. It’s like they’re speaking the same language, so you don’t have to translate. Behind the scenes, the messages are JSON-RPC 2.0 (a lightweight way to call functions remotely), but the key is that the format is standardized. No more inventing a custom API for every little thing.
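To make the "same language" idea concrete, here is a minimal sketch of what a client-side tool call could look like on the wire. The `tools/call` method and the `name`/`arguments` parameters follow the MCP specification as I understand it; the tool name `search_code` and its argument are made up for illustration.

```python
import json
from itertools import count

# JSON-RPC request ids only need to be unique per connection.
_ids = count(1)


def make_tool_call(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking a server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


# "search_code" is a hypothetical tool name, not one a real server must offer.
request = make_tool_call("search_code", {"query": "parse_config"})
print(json.dumps(request, indent=2))
```

Every server receives requests in this same envelope, which is why one client can talk to many different data sources.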

Let’s say you want your AI to help with customer support emails. With MCP, you’d set up an MCP Server for Gmail. Then, you could tell your AI, “Hey, check my support emails in Gmail and draft replies based on our knowledge base.” The MCP Client securely talks to the Gmail MCP Server, fetches the emails, and hands them over to the AI as context. Done. If an existing Gmail server covers your needs, you write no integration code of your own.

Or, if you’re a coder, imagine telling your AI, “Find all uses of this function across our company’s GitHub repos.” With MCP, the AI can run that search itself through a GitHub MCP Server. No more manual API calls or convoluted scripts.

Benefits of Using MCP (Or, Why You’ll Love It)

Let’s talk about why MCP is such a game-changer:

- Simplification: No more building custom connectors for every data source. Once you set up an MCP Server, it’s plug-and-play. Your AI can access all your data sources through the same interface.

- Scalability: As you add more data sources, you just need to set up more MCP Servers. Your AI can then access all of them seamlessly.

- Security: Since the MCP Server handles the connection to the data source, you can manage permissions and access control more easily. Your AI only sees what you allow it to see.

- Flexibility: MCP supports different transport mechanisms, so whether your data is local (like files on your computer) or remote (like a cloud database), it can handle it.

- Future-Proofing: MCP is designed to be model-agnostic, meaning it can work with any AI model, not just Claude or GPT. As new models come out, you won’t have to rebuild your integrations.

- Community-Driven: Since it’s open-source, you can expect a growing ecosystem of pre-built MCP Servers for popular tools like Google Drive, Slack, GitHub, and more. Why reinvent the wheel when someone else has already done the hard work?

| Feature | Benefit |
|---|---|
| Open standard | Replaces fragmented integrations with a single protocol |
| Secure connections | AI accesses data reliably, improving response quality |
| Pre-built servers | Reduces custom coding for tools like GitHub, Slack |
| Model-agnostic | Works with various AI models, ensuring flexibility |
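On the flexibility point: for local servers, the stdio transport boils down to one JSON message per line on standard input and output. The sketch below imitates that framing with in-memory streams. The `serve_lines` and `echo_handler` names are my own, and a real server does much more (initialization, capabilities, error handling), so treat this as an illustration of the framing only.

```python
import io
import json
from typing import Callable, TextIO


def serve_lines(reader: TextIO, writer: TextIO, handle: Callable[[dict], dict]) -> None:
    """Read one JSON message per line and answer each with one JSON line."""
    for line in reader:
        line = line.strip()
        if not line:
            continue  # ignore blank lines between messages
        response = handle(json.loads(line))
        writer.write(json.dumps(response) + "\n")
        writer.flush()


def echo_handler(message: dict) -> dict:
    # Hypothetical handler: echoes the method name back as the result.
    return {"jsonrpc": "2.0", "id": message.get("id"), "result": message.get("method")}


# In-memory streams stand in for stdin/stdout here.
incoming = io.StringIO('{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n')
outgoing = io.StringIO()
serve_lines(incoming, outgoing, echo_handler)
print(outgoing.getvalue(), end="")
```

Swapping the streams for `sys.stdin` and `sys.stdout` gives the local case; a remote transport would replace only this outer loop, not the handler.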

Setting Up MCP: A Developer’s Guide (With a Real Example)

Now, if you’re itching to try this out, here’s a quick overview of how to get started. (Don’t worry, I’ll keep it simple—no PhD required.)

Step 1: Understand the Basics

- MCP Host: This is where your AI lives (e.g., Claude Desktop).

- MCP Server: This is what you build to connect your AI to your data source.

You’ll need to set up an MCP Server for each data source you want your AI to access. But don’t worry—there are pre-built ones for popular tools like GitHub and Google Drive.

Step 2: Set Up Your Environment

- Make sure you have Python 3.10 or later installed.

- Install the `uv` package manager (it’s like `pip` but cooler).

- Create a new project and virtual environment with `uv init` and `uv venv`.

- Install dependencies: `mcp[cli]`, `requests`, `python-dotenv`, and any other libraries you need for your data source (e.g., `notion-client` for Notion).

Step 3: Set Up Environment Variables

- You’ll need tokens for your data sources (e.g., GitHub token, Notion API key).

- Store them in a `.env` file for security.
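A small sketch of how a server might check those variables at startup, so a missing token fails loudly instead of halfway through a request. The variable names match the GitHub and Notion example later in this article; `load_settings` is a hypothetical helper. In a real project you would typically call `load_dotenv()` from `python-dotenv` first so the `.env` file is read into the environment.

```python
import os
from typing import Mapping

REQUIRED = ("GITHUB_TOKEN", "NOTION_API_KEY", "NOTION_PAGE_ID")


def load_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Return the required settings, or raise listing every missing name."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED}


# Demo with a fake environment so no real secrets are needed.
fake_env = {"GITHUB_TOKEN": "ghp_example", "NOTION_API_KEY": "secret_example"}
try:
    load_settings(fake_env)
except RuntimeError as err:
    print(err)
```

Whatever approach you pick, keep the `.env` file out of version control.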

Step 4: Write Your Integration Code

- For each data source, write functions that interact with its API.

- For example, for GitHub, you might write a function to fetch pull request details.
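Here is one way such a function could look. This is a sketch, not the tutorial’s code: it uses the standard library’s `urllib` instead of `requests` so it runs without extra installs, and the helper names (`build_pr_request`, `summarize_pr`, `fetch_pr`) are my own. The endpoint is GitHub’s REST route for a single pull request.

```python
import json
import urllib.request

API_ROOT = "https://api.github.com"


def build_pr_request(owner: str, repo: str, number: int, token: str) -> urllib.request.Request:
    """Prepare (but do not send) a GET request for one pull request."""
    url = f"{API_ROOT}/repos/{owner}/{repo}/pulls/{number}"
    headers = {
        "Accept": "application/vnd.github+json",
        "Authorization": f"Bearer {token}",
    }
    return urllib.request.Request(url, headers=headers, method="GET")


def summarize_pr(payload: dict) -> dict:
    """Keep only a few fields a reviewer usually needs."""
    return {
        "title": payload.get("title"),
        "author": (payload.get("user") or {}).get("login"),
        "state": payload.get("state"),
        "body": payload.get("body") or "",
    }


def fetch_pr(owner: str, repo: str, number: int, token: str) -> dict:
    """Send the request and return the summarized pull request."""
    request = build_pr_request(owner, repo, number, token)
    with urllib.request.urlopen(request, timeout=10) as response:
        return summarize_pr(json.load(response))
```

Splitting "build the request" from "send it" keeps the network call in one place, which makes the rest easy to test offline.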

Step 5: Implement Your MCP Server

- Use the `mcp` library to create an MCP Server.

- Register your functions (tools) so the AI can call them.

- Run the server with `mcp.run(transport="stdio")`.
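If "register your functions" sounds abstract, this standard-library sketch shows the idea: a decorator records each function under its name, and a dispatcher routes incoming `tools/call` requests to it. It is a simplified stand-in, not the `mcp` package (which handles registration for you through its own tool decorator and wraps results in a richer structure). The `tool`, `add_numbers`, and `handle_request` names are assumptions for illustration.

```python
from typing import Any, Callable

# Maps tool name -> Python function.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function under its own name so clients can call it."""
    TOOLS[func.__name__] = func
    return func


@tool
def add_numbers(a: int, b: int) -> int:
    """Hypothetical tool used only for this illustration."""
    return a + b


def handle_request(message: dict) -> dict:
    """Dispatch a tools/call request to the matching registered function."""
    reply: dict = {"jsonrpc": "2.0", "id": message.get("id")}
    params = message.get("params", {})
    func = TOOLS.get(params.get("name"))
    if message.get("method") != "tools/call" or func is None:
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return reply
    try:
        # Simplified: the raw return value is used as the result.
        reply["result"] = func(**params.get("arguments", {}))
    except TypeError as err:  # typically wrong or missing arguments
        reply["error"] = {"code": -32602, "message": f"Invalid params: {err}"}
    return reply


print(handle_request({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "add_numbers", "arguments": {"a": 2, "b": 3}},
}))
```

The error codes are the standard JSON-RPC ones for an unknown method and bad parameters.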

Step 6: Connect to Your AI

- In your AI tool (like Claude Desktop), look for the MCP integration option.

- Connect to your MCP Server, and you’re good to go.
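In practice, hosts like Claude Desktop learn about local servers from a JSON config file. At the time of writing, Claude Desktop uses a file named `claude_desktop_config.json` with an `mcpServers` key, but check the current docs for the exact location and format. The sketch below just generates such an entry; the server label, directory, and script name are placeholders borrowed from the example in the next section.

```python
import json


def server_entry(project_dir: str, script: str) -> dict:
    """Describe how a host should launch a local stdio server with uv."""
    return {
        "command": "uv",
        "args": ["--directory", project_dir, "run", script],
    }


config = {
    "mcpServers": {
        # "pr_reviewer" is just a label; the path below is a placeholder.
        "pr_reviewer": server_entry("/absolute/path/to/pr_reviewer", "pr_analyzer.py"),
    }
}
print(json.dumps(config, indent=2))
```

After editing the config, restart the host so it picks up the new server.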

Real Example: Building an MCP Server for GitHub and Notion

Let’s say you want to build an MCP Server that lets your AI analyze GitHub pull requests and save notes to Notion. Here’s how you’d do it, based on a DataCamp tutorial:

- Set Up the Environment:

  - Install Python 3.10+.

  - Install `uv` and create a project: `uv init pr_reviewer; cd pr_reviewer`.

  - Create and activate a virtual environment: `uv venv; source .venv/bin/activate` (Mac/Linux) or `.venv\Scripts\activate` (Windows).

  - Install dependencies: `uv add "mcp[cli]" requests python-dotenv notion-client`.

- Set Up Environment Variables:

  - Create a `.env` file with one entry per line: `GITHUB_TOKEN=your_github_token`, `NOTION_API_KEY=your_notion_api_key`, `NOTION_PAGE_ID=your_notion_page_id`.

  - Generate tokens from GitHub and Notion’s developer settings.

- Write Integration Code:

  - Create `github_integration.py` with a function to fetch PR details using the GitHub API.

  - Create `notion_integration.py` with a function to create Notion pages.

- Implement MCP Server:

  - Create `pr_analyzer.py` with a class that initializes an MCP Server and registers tools for fetching PRs and creating Notion pages.

  - Run the server with `python pr_analyzer.py`.

- Connect to Claude Desktop:

  - In Claude Desktop, look for the 🔌 (plug) icon to connect to your MCP Server.

  - Now you can ask your AI to analyze PRs or save notes to Notion!
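For the Notion half, here is a rough sketch of what "create a Notion page" involves at the HTTP level. It is not the tutorial’s code, which uses the `notion-client` library; this version builds the request with the standard library so the shape of the payload is visible. The helper names are hypothetical, and the pinned API version is an assumption you should adjust to whatever version you target.

```python
import json
import urllib.request

NOTION_VERSION = "2022-06-28"  # assumption: pin the API version you target


def build_page_payload(parent_page_id: str, title: str, text: str) -> dict:
    """Body for a new page with a title and one paragraph, under a parent page."""
    return {
        "parent": {"page_id": parent_page_id},
        "properties": {"title": {"title": [{"text": {"content": title}}]}},
        "children": [
            {
                "object": "block",
                "type": "paragraph",
                "paragraph": {"rich_text": [{"type": "text", "text": {"content": text}}]},
            }
        ],
    }


def build_create_page_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) the POST request that creates the page."""
    return urllib.request.Request(
        "https://api.notion.com/v1/pages",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Notion-Version": NOTION_VERSION,
            "Content-Type": "application/json",
        },
        method="POST",
    )


payload = build_page_payload("your_notion_page_id", "PR review notes", "Looks good to merge.")
print(json.dumps(payload, indent=2))
```

Passing the prepared request to `urllib.request.urlopen` would send it; the integration also needs to be shared with the parent page in Notion before the call is allowed.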

See? It’s not as scary as it sounds. And once you’ve set it up, you can reuse it for other projects. For more details, check out the official MCP documentation.

Real-World Applications (Or, Why MCP is Cool)

Let’s talk about some practical use cases where MCP shines:

- Code Assistance: Imagine telling your AI, “Find all instances of this function across our company’s GitHub repos.” With MCP, it can do that instantly, without you writing a single line of code.

- Customer Support: Your AI can directly access your support emails in Gmail and draft responses based on your knowledge base. No more copying and pasting emails manually.

- Data Analysis: Need to analyze data from your database? Your AI can query it directly through MCP, saving you hours of manual work.

- Workflow Automation: Automate tasks like creating new repos, adding code, or even deploying applications by connecting your AI to your CI/CD tools.

The possibilities are endless, and they’re all about making your AI smarter and more useful without you having to do extra work.

| Use Case | How MCP Helps |
|---|---|
| Code Assistance | Accesses GitHub repos for code analysis |
| Customer Support | Fetches emails from Gmail for drafting replies |
| Data Analysis | Queries databases for insights |
| Workflow Automation | Integrates with CI/CD tools for automation |
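To give the data analysis row some texture, here is a sketch of the kind of function a database-backed server might expose as a tool. It uses SQLite from the standard library with made-up demo data; `run_readonly_query` is a hypothetical name, and the "starts with SELECT" check is a deliberately crude guard, not real access control.

```python
import sqlite3


def run_readonly_query(conn: sqlite3.Connection, sql: str, limit: int = 50) -> list[dict]:
    """Run a single SELECT statement and return at most `limit` rows as dicts."""
    if not sql.lstrip().lower().startswith("select"):
        raise ValueError("Only SELECT statements are allowed")
    cursor = conn.execute(sql)  # execute() refuses more than one statement
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchmany(limit)]


# Demo data in an in-memory database; table and values are made up.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, total REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 20.0)])
print(run_readonly_query(conn, "SELECT id, total FROM orders ORDER BY id"))
```

For anything beyond a demo, a read-only database user or connection is a sturdier safeguard than string checks.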

Challenges and Considerations (Because Nothing’s Perfect)

Now, don’t get me wrong—MCP is awesome, but it’s not without its challenges. Since it’s still new (introduced in November 2024), there might be some teething issues. For example, some developers have reported connection problems when setting up MCP clients, like with the GitHub MCP client in Cursor. This just goes to show that even with standards, things can go wrong, and you still need to understand the underlying technologies to troubleshoot.

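Security is another big consideration. Since MCP allows your AI to access your data sources, you need to ensure the connections are secure and that you’re not exposing sensitive information. The good news is that each MCP Server is the place where credentials and permissions for its data source live, so you can control access tightly in one spot. The flip side is that it’s on you to configure those permissions sensibly.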
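As one concrete example of "control access tightly", a server that exposes files can refuse any path that escapes a single allowed directory. This is a minimal sketch under that assumption; `resolve_allowed` and the `allowed_dir` folder are hypothetical names, and real servers usually layer more checks on top.

```python
from pathlib import Path


def resolve_allowed(root: Path, requested: str) -> Path:
    """Return the resolved path if it stays inside root, else raise."""
    root = root.resolve()
    candidate = (root / requested).resolve()
    if not candidate.is_relative_to(root):  # requires Python 3.9+
        raise PermissionError(f"{requested!r} is outside the allowed directory")
    return candidate


# "allowed_dir" is a placeholder; it does not need to exist for this check.
ROOT = Path.cwd() / "allowed_dir"
print(resolve_allowed(ROOT, "notes/todo.txt"))
try:
    resolve_allowed(ROOT, "../secrets.txt")
except PermissionError as err:
    print("blocked:", err)
```

Resolving the path before comparing is what catches `..` tricks and absolute paths.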

And let’s not forget: while MCP simplifies integration, it doesn’t mean you can stop learning tech. You still need to understand how APIs work, how databases store data, and what protocols like JSON-RPC or gRPC are. When things break (and they will), your AI won’t magically fix them for you. You’ll need those skills to debug and maintain your system.

The Future of MCP (Or, Why It’s Going to Be Big)

MCP is still in its early days, but the potential is huge. It’s backed by Anthropic (the folks behind Claude), and it’s open-source, which means it’s likely to gain traction quickly. As more developers and companies adopt it, we can expect to see a rich ecosystem of MCP Servers for various data sources, making integration even easier.

In the future, MCP could become as standard as HTTP is for web communications. It’s that fundamental. Imagine a world where your AI can seamlessly connect to any data source, anywhere, without you having to lift a finger. That’s the dream, and MCP is making it a reality.

Early adopters like Block and Apollo are already using MCP, and tools like Zed, Replit, Codeium, and Sourcegraph are integrating it for better functionality. With developer toolkits for deploying remote production MCP Servers planned, the future looks bright.

Conclusion: MCP—Your New Best Friend

So, there you have it—MCP, the Model Context Protocol, is set to revolutionize how we connect AI models to our data sources. It’s like giving your AI the keys to your data kingdom, but with a standardized lock that works everywhere. No more custom integrations, no more reinventing the wheel. Just plug-and-play AI goodness.

Whether you’re a developer looking to enhance your AI’s capabilities or just curious about the latest in AI tech, keep an eye on MCP. It’s going to be big. Now, go forth and make your AI smarter with MCP!

FAQ Section

Got questions? Here are some answers to the most common ones:

- What is the difference between MCP and traditional APIs?

  Traditional APIs require you to write custom code for each integration, whereas MCP provides a standardized way for AI models to interact with data sources, reducing the need for custom implementations.

- Can I use MCP with any AI model?

  MCP is designed to be model-agnostic, so in theory, yes. However, it’s currently most prominently supported by Anthropic’s Claude.

- How secure is MCP?

  Security is handled at the MCP Server level, where you can implement authentication and authorization as needed. It’s up to you to ensure secure practices.

- Is MCP only for large enterprises, or can small developers use it?

  MCP is open-source and accessible to all developers, from small startups to large enterprises.

- What are some popular MCP servers available?

  There are pre-built MCP servers for Google Drive, Slack, GitHub, Git, Postgres, and Puppeteer, among others.

- Do I need to be an expert in networking or APIs to use MCP?

  While understanding the basics of networking and APIs can help, MCP is designed to simplify the integration process, so even developers with moderate experience should be able to set it up with the help of documentation and tutorials.

- Where can I learn more about MCP?

  Check out the official documentation at modelcontextprotocol.io, and look for tutorials and guides on platforms like DataCamp or Anthropic’s blog.

Key Citations:

- Anthropic: Introducing the Model Context Protocol

- Model Context Protocol: Introduction

- Spring AI: MCP Overview

- DataCamp: Model Context Protocol (MCP) Tutorial