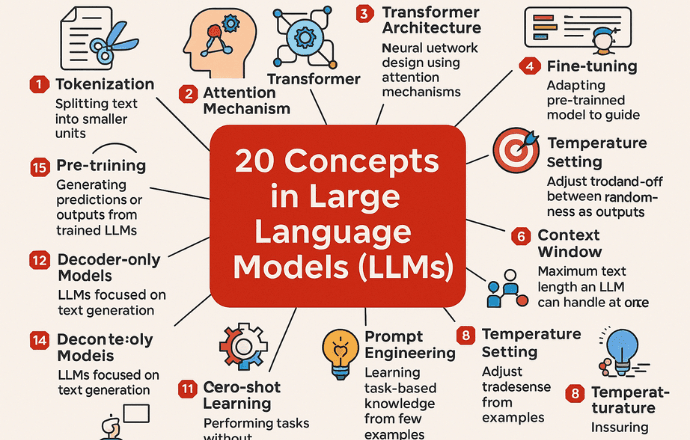

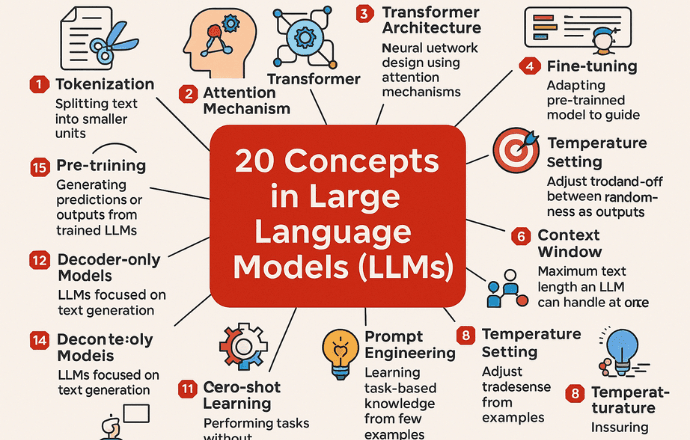

20 Key Concepts in Large Language Models (LLMs)

Large Language Models (LLMs) are revolutionizing the world of artificial intelligence by enabling machines to read, write, translate, and even reason in natural human language. These models power applications like chatbots, AI assistants, coding copilots, search engines, and educational tools. Whether you’re using ChatGPT, Google Bard, or Claude, you’re interacting with a Large Language Model (LLM).

But what exactly makes these models so intelligent and versatile? How do they process language, make decisions, and generate coherent responses across different topics?

To answer these questions, we need to look under the hood and understand the foundational components that make LLMs work. From tokenization and attention mechanisms to prompt engineering, fine-tuning, and reinforcement learning, each concept plays a critical role in the model’s performance.

In this article, we’ll explore 20 key concepts in Large Language Models (LLMs). Whether you’re a beginner, developer, researcher, or just curious about how AI understands language, this guide will give you a solid overview of what powers the most advanced language models today.

Understanding the Core of Large Language Models (LLMs)

- What are LLMs? LLMs are AI systems that understand and generate human-like text, powering tools like chatbots and code assistants.

- Key concepts: Research suggests 20 foundational concepts, like tokenization, attention mechanisms, and transformer architecture, drive LLM functionality.

- Why it matters: These concepts help developers and enthusiasts grasp how LLMs work, enabling better use and innovation.

- Complexity acknowledged: While powerful, LLMs can produce errors (hallucinations) and require careful tuning for ethical use.

What Are Large Language Models?

Large language models, or LLMs, are like super-smart digital librarians who can read, write, and answer questions in human-like ways. They’re behind tools like ChatGPT and Grok, helping with everything from writing emails to coding. Understanding their 20 key concepts—think of them as the ingredients in a tech recipe—lets you peek under the hood and use these tools more effectively.

Why Developers Need These Concepts

For developers, these concepts are like a cheat sheet for building or tweaking AI-powered apps. Knowing how tokenization splits text or how attention mechanisms focus on context can help you craft better prompts or fine-tune models for specific tasks. It’s about making tech work smarter for you, not just marveling at its magic.

A Quick Peek at the Concepts

From breaking text into tokens to ensuring models align with human values, these 20 concepts cover the nuts and bolts of LLMs. They’re not just jargon—they’re practical tools for anyone curious about AI, whether you’re coding your next project or just geeking out over tech trends.

1. Tokenization: Slicing Text Like Pizza

Tokenization is like chopping a big pizza into bite-sized slices. It’s the process of breaking text into smaller units called tokens—words, subwords, characters, or even punctuation.

- Why it matters: LLMs can’t gulp down whole paragraphs. Tokens are the building blocks they process.

- How it works: There are three main types:

- Word-based: Splits text into words, e.g., “The quick fox” → [‘The’, ‘quick’, ‘fox’]. Struggles with rare words.

- Character-based: Each character is a token, e.g., [‘T’, ‘h’, ‘e’, ‘ ‘, ‘q’, …]. Great for unknown words but slow.

- Subword-based: A hybrid using Byte-Pair Encoding (BPE), e.g., “unbelievable” → [‘un’, ‘believ’, ‘able’]. Balances speed and flexibility.

- Fun fact: Subword tokenization is why LLMs can handle weird words like “supercalifragilisticexpialidocious” without breaking a sweat.

Next time you chat with an AI, know it’s munching on tokens! Tokenization Explained

2. Attention Mechanism: The Model’s Focus Mode

Attention is how LLMs decide which words matter most in a sentence. It’s like your brain zoning in on “coffee” when someone mentions a morning meeting.

- Why it matters: It helps LLMs understand context, making them better at tasks like translation or answering questions.

- How it works: The star is self-attention, where each word “looks” at others to compute its importance:

- Words get query, key, and value vectors.

- Attention scores are calculated via dot products, scaled, and softened with softmax.

- The model sums up weighted values for each word’s final representation.

- Pro tip: Multi-head attention lets the model focus on different parts of a sentence at once, like having multiple spotlights.

Attention is why LLMs don’t just read—they understand. Self-Attention Guide

3. Transformer Architecture: The LLM Backbone

Transformers are the architectural superheroes behind LLMs. Introduced in the 2017 paper Attention Is All You Need, they’re why models like GPT and BERT exist.

- Why it matters: Transformers process text in parallel, capturing long-range context unlike older models (RNNs).

- Key components:

- Encoder: Reads and understands input text.

- Decoder: Generates output text, using masked attention to avoid peeking at future tokens.

- Self-attention: Connects words across the sequence.

- Feedforward networks: Add depth to processing.

- Positional encoding: Tracks word order.

- Fun fact: Transformers aren’t just for text—they power vision and speech AI too!

Transformers are the MVP of AI, making LLMs fast and smart. Transformer Overview

4. Parameter Size: The Model’s Brainpower

Parameter size is the number of adjustable weights in an LLM—think of them as brain synapses.

- Why it matters: More parameters mean more learning capacity, but also more computing power needed.

- Examples:

- GPT-2: 1.5 billion parameters.

- GPT-3: 175 billion parameters.

- GPT-4: Even more (exact number’s a mystery).

- Caveat: Bigger isn’t always better. Overfitting or high costs can be issues.

Parameters are why LLMs can tackle complex tasks, but they’re a balancing act. Parameter Scaling

5. Fine-tuning: Customizing Your LLM

Fine-tuning is like tailoring a suit—it takes a pre-trained LLM and tweaks it for a specific task.

- Why it matters: Makes LLMs more accurate for niche jobs, like coding or medical Q&A.

- How it works: Train the model on a smaller, task-specific dataset to adjust its weights.

- Risk: Over-fine-tuning can make the model too specialized, losing its general smarts.

Fine-tuning is your ticket to a bespoke AI. Fine-tuning Guide

6. Prompt Engineering: Asking Smart Questions

Prompt engineering is the art of crafting inputs to get the best LLM outputs. It’s like knowing exactly how to phrase a Google search.

- Why it matters: Good prompts mean better answers, saving time and frustration.

- Tips:

- Be specific: “Explain transformers” beats “Tell me about AI.”

- Use examples: Show the model what you want.

- Experiment: Small tweaks can make big differences.

- Example: “Write a Python function to sort a list” vs. “Write a Python function to sort a list with comments explaining each step.”

Master prompt engineering, and you’ll wield LLMs like a pro. Prompt Tips

7. Context Window: The Model’s Memory

The context window is how many tokens an LLM can “remember” at once—its short-term memory.

- Why it matters: A larger window means better coherence in long texts or chats.

- Examples:

- GPT-2: 1024 tokens.

- GPT-3: 2048 tokens.

- Newer models: Even longer.

- Trade-off: Bigger windows need more computing power.

Think of it as the model’s mental notepad. Context Window Info

8. Temperature Setting: Creativity vs. Precision

Temperature controls how random or focused an LLM’s output is—its creativity dial.

- Why it matters: Adjusts the vibe of responses.

- Settings:

- Low (0.1): Precise, predictable outputs (great for code).

- High (1.0+): Creative, varied outputs (fun for stories).

- Example: Low temperature might give a formal email; high might write a quirky one.

Play with temperature to match your task’s mood. Temperature Settings

9. Embedding: Words as Numbers

Embeddings turn words into numerical vectors, capturing their meaning and relationships.

- Why it matters: Lets LLMs “understand” language mathematically.

- How it works: Words like “king” and “queen” get similar vectors, while “apple” is far off.

- Cool trick: “King” – “man” + “woman” ≈ “queen.”

Embeddings are the secret sauce of language comprehension. Embedding Basics

10. Few-shot Learning: Learning by Example

Few-shot learning is when an LLM learns a task from just a few examples in the prompt.

- Why it matters: Saves time when you can’t fine-tune.

- Example: “Translate ‘hello’ to French: ‘bonjour.’ Now translate ‘goodbye’:” → “au revoir.”

It’s like teaching with flashcards—quick and effective. Few-shot Learning

11. Zero-shot Learning: No Examples Needed

Zero-shot learning is when an LLM tackles a task without any examples, relying on its pre-training.

- Why it matters: Shows off the model’s generalization skills.

- Example: “Summarize this article” works because the model already knows summarization.

It’s like asking a trivia buff a random question—they just know. Zero-shot Learning

12. Chain-of-Thought Prompting: Step-by-Step Thinking

Chain-of-thought prompting asks LLMs to reason step by step, improving accuracy on complex tasks.

- Why it matters: Reduces errors in logic-heavy questions.

- Example: “What’s 17 + 23? Step 1: 10 + 20 = 30. Step 2: 7 + 3 = 10. Total: 40.”

It’s like giving the model a whiteboard to work out problems. Chain-of-Thought

13. Inference: From Prompt to Answer

Inference is when a trained LLM generates output based on your input.

- Why it matters: It’s the “using” phase of AI, critical for real-time apps.

- Tips: Optimize with quantization or distillation for faster, cheaper inference.

Inference is where the magic becomes practical. Inference Guide

14. Self-attention: Context King

Self-attention (a subset of attention) lets each word in a sequence “talk” to others, capturing context.

- Why it matters: It’s the heart of transformers, enabling rich understanding.

- How it works: Same as attention, but focused within one sequence.

Self-attention is why LLMs get the big picture. Self-Attention Deep Dive

15. Pre-training: The School of Language

Pre-training is where LLMs learn general language skills from massive datasets.

- Why it matters: Builds the foundation for all tasks.

- Examples:

- GPT-3: Trained on 45TB of text.

- BERT: 3.3 billion words.

- Risk: Can inherit biases from data.

Pre-training is like an LLM’s college years. Pre-training Insights

16. Decoder-only Models: Text Generators

Decoder-only models, like GPT, focus on generating text one token at a time.

- Why it matters: Ideal for creative tasks like writing or coding.

- How it works: Uses self-attention to predict the next token.

They’re the storytellers of LLMs. Decoder-only Models

17. Encoder-Decoder Models: Input to Output

Encoder-decoder models, like T5, handle tasks needing both input understanding and output generation.

- Why it matters: Perfect for translation or summarization.

- How it works: Encoder processes input; decoder generates output.

They’re the translators of the AI world. Encoder-Decoder Models

18. Hallucination: When LLMs Make Stuff Up

Hallucination is when LLMs confidently spit out false info.

- Why it matters: Can mislead users, especially in critical applications.

- Example: Asking about a 2025 event might get a made-up answer.

- Fixes: Better data, fact-checking, or prompt tweaks.

Hallucinations remind us LLMs aren’t perfect. Hallucination Issues

19. RLHF: Teaching LLMs Manners

Reinforcement Learning with Human Feedback (RLHF) fine-tunes LLMs using human ratings.

- Why it matters: Makes models safer and more aligned with human values.

- How it works: Humans score outputs; the model learns to maximize good scores.

RLHF is like teaching an AI to say “please” and “thank you.” RLHF Explained

20. Alignment: Keeping LLMs Ethical

Alignment ensures LLMs follow human values and ethical standards.

- Why it matters: Prevents harmful or biased outputs.

- Methods: RLHF, fine-tuning, safety filters.

Alignment is the guardrail for responsible AI. Alignment Research

Related: AI Models Ranked by IQ: Which One Is Truly the Smartest?

Conclusion: Your LLM Adventure Begins

Wow, you made it through all 20 concepts—high five! These are the gears that make LLMs tick, from tokenizing text to aligning with ethics. Whether you’re building the next AI app or just curious, this knowledge is your superpower.

LLMs are evolving fast, with longer context windows, better reasoning, and more ethical focus. So, go experiment—tweak prompts, try fine-tuning, or ask an LLM to write you a poem. The future of AI is bright, and you’re now part of it!

FAQ

Q: How do I start using LLMs as a developer?

A: Use platforms like Hugging Face or OpenAI’s API. They offer pre-trained models and simple interfaces for tasks like text generation.

Q: Can I fine-tune an LLM myself?

A: Yes! Platforms like Hugging Face support fine-tuning on your dataset. Ensure it’s clean and relevant for best results.

Q: How do I reduce LLM hallucinations?

A: Use clear prompts, verify outputs, and choose models with safety features to minimize false info.

Q: Are bigger models always better?

A: Not necessarily. Larger models are powerful but resource-heavy. Pick one that fits your task and budget.

Q: What’s the difference between encoder-only and decoder-only models?

A: Encoder-only (e.g., BERT) excels at understanding text; decoder-only (e.g., GPT) is great for generating text.

Q: How can I improve my prompts?

A: Be specific, include examples, and adjust temperature for creativity or precision.

Q: Are LLMs safe?

A: They can be, but check for biases and use safety filters to ensure ethical outputs.

| Concept | Description | Why It Matters |

|---|---|---|

| Tokenization | Breaks text into tokens | Enables text processing |

| Attention Mechanism | Focuses on relevant words | Improves context understanding |

| Transformer Architecture | Processes text in parallel | Powers modern LLMs |

| Parameter Size | Number of model weights | Determines learning capacity |

| Fine-tuning | Customizes model for tasks | Enhances task-specific performance |

| Prompt Engineering | Crafts effective inputs | Boosts output quality |

| Context Window | Tokens model can handle | Affects coherence |

| Temperature Setting | Controls output randomness | Balances creativity vs. precision |

| Embedding | Numerical word representations | Enables language understanding |

| Few-shot Learning | Learns from few examples | Saves training time |

| Zero-shot Learning | Performs without examples | Shows generalization |

| Chain-of-Thought | Encourages step-by-step reasoning | Improves complex task accuracy |

| Inference | Generates output from input | Critical for real-time use |

| Self-attention | Connects words in sequence | Captures context |

| Pre-training | Learns from massive data | Builds general knowledge |

| Decoder-only Models | Generates text | Ideal for creative tasks |

| Encoder-Decoder Models | Processes input/output | Great for translation |

| Hallucination | Generates false info | Challenges accuracy |

| RLHF | Uses human feedback | Enhances safety |

| Alignment | Ensures ethical outputs | Promotes responsible AI |