Why You Should Use a Proxy for Web Scraping

In today’s data-driven world, web scraping is a game-changer for businesses, developers, data analysts, and marketers. It’s the process of automatically extracting data from websites—think product prices, customer reviews, or competitor strategies. But as websites get smarter with anti-bot measures, scraping without the right tools can feel like hitting a brick wall. That’s where proxies come in. Using a proxy for web scraping is not just a technical trick—it’s a necessity to keep your projects running smoothly.

This article explains why proxies are essential for web scraping, the challenges of scraping without them, the types of proxies available, and how ProxyScrape can be your go-to solution. Whether you’re a developer building web scraping tools, a data analyst gathering insights, or a marketer tracking trends, this guide will show you how proxies can make your work easier, faster, and more reliable.

Why You Should Use a Proxy for Web Scraping

- Proxies are essential for successful web scraping, helping you avoid IP blocks and CAPTCHAs.

- They mask your IP address, ensuring anonymity and access to geo-restricted content.

- Different proxy types—datacenter, residential, rotating—suit various scraping needs.

- ProxyScrape offers reliable, affordable proxies to streamline your scraping projects.

What Are Proxies and Why Do They Matter?

Proxies act like a middleman between your computer and the internet. When you scrape a website, your requests go through the proxy’s IP address instead of your own, keeping your identity hidden. This is crucial for web scraping, as websites often block IPs that send too many requests. Proxies also let you access region-specific data and speed up your scraping by distributing requests across multiple IPs.

Common Challenges Without Proxies

Scraping without proxies is like trying to sneak into a guarded building with your face on camera. Websites can detect your IP and block you, throw up CAPTCHAs, or limit your access based on location. For example, a marketer scraping competitor prices might get blocked after a few requests, stalling their project. Proxies help you avoid these roadblocks, making your scraping smoother and more efficient.

Types of Proxies for Scraping

- Datacenter Proxies: Affordable and fast, but easier for websites to detect. Great for simple tasks like SEO monitoring.

- Residential Proxies: Use real residential IPs, making them harder to detect but more expensive. Ideal for sensitive or geo-restricted scraping.

- Rotating Proxies: Change IPs with each request, perfect for large-scale scraping to avoid blocks.

Why ProxyScrape?

ProxyScrape provides a range of proxies—datacenter, residential, and rotating—with high uptime and tools like a Proxy Checker to ensure performance. Their affordable plans and user-friendly dashboard make them a top choice for developers, analysts, and marketers. Try ProxyScrape to power your scraping projects.

The Challenges of Web Scraping Without a Proxy

Web scraping without a proxy is like trying to sneak into a guarded building with your face on camera. Websites are designed to protect their data, and they use several tactics to stop scrapers in their tracks. Here are the main challenges you’ll face:

- IP Blocking: Websites monitor IP addresses for unusual activity. Sending many requests from the same IP in a short period can trigger a block and halt your scraping. For example, if you’re scraping an e-commerce site for pricing data, you might get blocked after just a few requests.

- CAPTCHAs: To verify human users, websites deploy CAPTCHAs—those annoying puzzles or image selections. Without proxies, you’ll hit CAPTCHAs frequently, slowing down or stopping your scraping process.

- Geo-Restrictions: Some websites restrict access based on location. If you’re trying to scrape region-specific content, like local pricing or reviews, you’ll be locked out without a proxy from that region.

- Rate Limiting: Websites often cap the number of requests from a single IP in a given time. This limits how much data you can collect, especially for large-scale projects.

Imagine you’re a marketer scraping competitor websites to compare prices across regions. Without proxies, you might get blocked after a handful of requests, leaving your project incomplete. Proxies solve these issues by masking your IP and distributing requests across multiple IPs, keeping your scraping undetected and uninterrupted.
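In code, these obstacles usually surface as particular HTTP responses. The sketch below is one hypothetical way to label a response and choose a wait time before retrying. The function names, the status-code mapping, and the "captcha" substring check are illustrative assumptions; real sites signal blocks in many different ways.

```python
import random


def classify_response(status: int, body: str) -> str:
    """Label a response with a rough guess at what the site is telling us."""
    if status == 429:  # HTTP 429 Too Many Requests: a rate limit was hit
        return "rate_limited"
    if status == 403:  # HTTP 403 Forbidden: often used when an IP is blocked
        return "blocked"
    if "captcha" in body.lower():  # assumption: challenge pages mention "captcha"
        return "captcha"
    if 200 <= status < 300:
        return "ok"
    return "error"


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Exponential backoff with jitter: a random wait of up to base * 2**attempt seconds, capped."""
    return random.uniform(0, min(cap, base * 2 ** attempt))
```

A scraper could call `classify_response` after each request and sleep for `backoff_delay(attempt)` seconds, or switch to another proxy, whenever the label is not "ok".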

What Is a Proxy and How Does It Work?

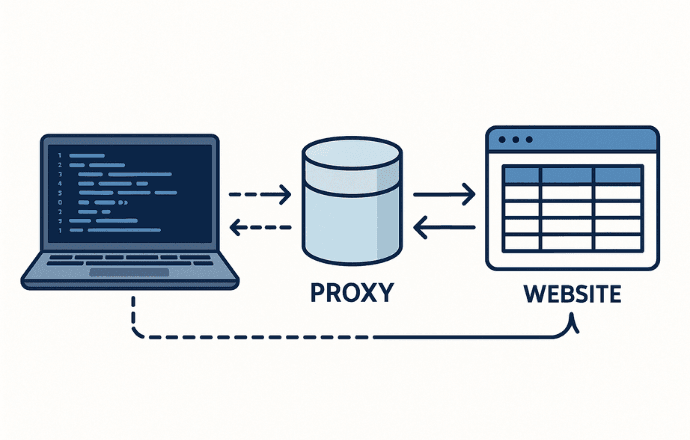

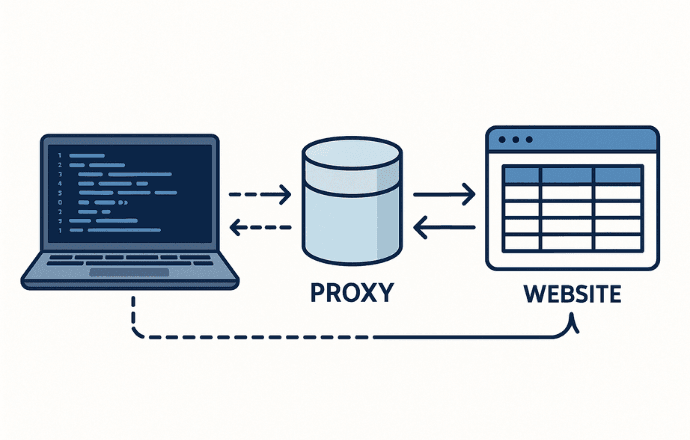

A proxy is a server that acts as a middleman between your computer (or your web scraping tools) and the internet. Here’s how it works in simple terms:

- When you send a request to a website, it goes to the proxy server first.

- The proxy forwards the request to the website using its own IP address.

- The website responds to the proxy, which then sends the data back to you.

This process hides your real IP address, making it look like the request came from the proxy instead of you. For web scraping, this is a game-changer—it helps you avoid CAPTCHAs, bypass IP blocking, and access geo-restricted content.
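The request flow described above can be sketched with Python's standard library. This is a minimal illustration, not a full scraper: the function names are made up for this example, and the proxy address you pass in is whatever your provider gives you.

```python
import urllib.request


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch_via_proxy(url: str, proxy_url: str, timeout: float = 10.0) -> bytes:
    """Fetch url through the proxy, so the target site sees the proxy's IP."""
    opener = build_proxy_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

A call such as `fetch_via_proxy("https://example.com", "http://203.0.113.10:8080")` would route the request through the proxy at that address. The address shown is a placeholder from a range reserved for documentation, so it will not work as written.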

Beyond scraping, proxies are useful for other tasks, like accessing region-locked streaming services, improving online privacy, or caching data to speed up browsing. But for web scraping, their ability to mask your identity and distribute requests is what makes them indispensable.

Types of Proxies Explained (Datacenter, Residential, Rotating)

Not all proxies are created equal. Choosing the right type depends on your project’s needs, budget, and the level of anonymity required. Here’s a breakdown of the main types:

| Proxy Type | Source | Cost | Detection Risk | Best Use Case |
|---|---|---|---|---|
| Datacenter | Hosted on data center servers | Affordable | Higher | SEO monitoring, price comparison |
| Residential | Real residential IPs from ISPs | Expensive | Lower | Geo-restricted content, sensitive scraping |
| Rotating | Changes IP per request or periodically | Varies | Very Low | Large-scale scraping, avoiding blocks |

1. Datacenter Proxies

- What They Are: These proxies use IP addresses hosted in data centers and sold by proxy services. They are not tied to an ISP-assigned home connection.

- Pros: Fast and cost-effective, making them great for budget-conscious projects.

- Cons: Higher risk of detection since websites can flag data center IPs as non-human.

- Use Case: Ideal for tasks like SEO monitoring or scraping public data where anonymity isn’t critical. For example, a developer tracking keyword rankings can use datacenter proxies to gather data efficiently.

2. Residential Proxies

- What They Are: These use IP addresses assigned to real residential devices by Internet Service Providers (ISPs).

- Pros: Mimic real user traffic, making them harder to detect.

- Cons: More expensive than datacenter proxies.

- Use Case: Perfect for scraping sensitive sites or accessing geo-restricted content. For instance, a data analyst scraping regional pricing data would benefit from residential proxies to appear as a local user.

3. Rotating Proxies

- What They Are: These proxies automatically switch IP addresses with each request or after a set period.

- Pros: Minimize the risk of IP blocking by constantly changing IPs.

- Cons: Can be complex to manage without the right tools.

- Use Case: Essential for large-scale scraping projects, like a marketer collecting data from multiple e-commerce sites across different regions.

Understanding these types helps you pick the right proxy for your project, balancing cost, anonymity, and performance.
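Rotating proxy services normally switch IPs for you on the provider side. If you manage your own list instead, the same idea can be approximated on the client. The `ProxyPool` class below is a hypothetical sketch of round-robin rotation, with a way to drop a proxy that has stopped working.

```python
from collections import deque


class ProxyPool:
    """Hand out proxies in round-robin order so consecutive requests use different IPs."""

    def __init__(self, proxies):
        self._proxies = deque(proxies)

    def next_proxy(self) -> str:
        """Return the proxy at the front, then move it to the back of the queue."""
        if not self._proxies:
            raise RuntimeError("proxy pool is empty")
        proxy = self._proxies[0]
        self._proxies.rotate(-1)
        return proxy

    def discard(self, proxy: str) -> None:
        """Remove a proxy that has been blocked or has gone offline."""
        try:
            self._proxies.remove(proxy)
        except ValueError:
            pass  # already removed


pool = ProxyPool(["http://203.0.113.10:8080", "http://203.0.113.11:8080"])  # placeholder addresses
first = pool.next_proxy()
second = pool.next_proxy()
```

Each call to `next_proxy()` returns a different address until the list wraps around, which spreads requests evenly across the pool.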

Why Proxies Are Essential for Web Scraping

Proxies are the backbone of successful web scraping. Here’s why they’re non-negotiable:

1. Avoiding IP Blocks

Websites use anti-bot systems to detect and block IPs sending too many requests. Proxies distribute your requests across multiple IPs, making it harder for sites to flag you as a bot. This is especially crucial for large-scale projects where you’re scraping thousands of pages.
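One way to put this into practice is to retry a failed request through a different proxy instead of hammering the site from the same IP. The sketch below assumes a caller-supplied `fetch(url, proxy)` function (hypothetical, not part of any library) that raises `OSError` on connection or HTTP failures, as `urllib` does.

```python
from typing import Callable, Sequence


def fetch_with_failover(
    url: str,
    proxies: Sequence[str],
    fetch: Callable[[str, str], bytes],
    max_attempts: int = 3,
) -> bytes:
    """Try the URL through successive proxies and return the first successful result."""
    last_error = None
    attempts = list(proxies)[:max_attempts]
    for proxy in attempts:
        try:
            return fetch(url, proxy)
        except OSError as exc:  # urllib.error.URLError is a subclass of OSError
            last_error = exc  # remember the failure and move on to the next proxy
    raise RuntimeError(f"{url}: all {len(attempts)} proxy attempts failed") from last_error
```

Capping the number of attempts keeps a single stubborn page from burning through the whole proxy list.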

2. Accessing Geo-Restricted Content

If you’re scraping data that’s only available in certain regions—like local pricing or reviews—proxies provide IPs from those locations, bypassing geo-restrictions. For example, a business analyst studying market trends in Europe can use European proxies to access region-specific data.
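As a rough illustration, region targeting can be as simple as grouping proxies by country and picking one from the group you need. The dictionary layout, the country codes, and the addresses below are all placeholders invented for this sketch; many providers instead expose location through their own dashboard or API.

```python
import random

# Hypothetical pool keyed by lowercase country code; addresses are documentation placeholders.
PROXIES_BY_COUNTRY = {
    "de": ["http://203.0.113.20:8080", "http://203.0.113.21:8080"],
    "fr": ["http://198.51.100.30:8080"],
}


def pick_proxy(country: str, pool: dict, rng=random) -> str:
    """Return a random proxy located in the requested country."""
    candidates = pool.get(country.lower())
    if not candidates:
        raise LookupError(f"no proxies available for country {country!r}")
    return rng.choice(candidates)


german_proxy = pick_proxy("DE", PROXIES_BY_COUNTRY)
```

Raising an error for an unknown country is a deliberate choice here: silently falling back to another region would return data for the wrong market.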

3. Maintaining Anonymity

Proxies hide your real IP, protecting your identity and keeping your scraping activities private. This is vital when scraping competitive or sensitive data, like a retailer monitoring competitor pricing.

4. Improving Performance

By spreading traffic across multiple IPs, proxies let you send more requests in parallel without exceeding any single IP’s rate limit. This speeds up your scraping process, letting you collect data faster and more efficiently.
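A minimal sketch of this idea, using a thread pool from the standard library: each URL is paired with a proxy in turn, and the downloads run in parallel. The `fetch(url, proxy)` callable is an assumption supplied by the caller, and the helper names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, Tuple


def assign_proxies(urls: Sequence[str], proxies: Sequence[str]) -> List[Tuple[str, str]]:
    """Pair each URL with a proxy, cycling through the proxy list in order."""
    return [(url, proxies[i % len(proxies)]) for i, url in enumerate(urls)]


def fetch_all(
    urls: Sequence[str],
    proxies: Sequence[str],
    fetch: Callable[[str, str], bytes],
    max_workers: int = 8,
) -> List[bytes]:
    """Fetch all URLs concurrently; results come back in the same order as urls."""
    jobs = assign_proxies(urls, proxies)
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(lambda job: fetch(*job), jobs))
```

With four proxies and a hundred URLs, each proxy handles about twenty-five requests, so the load any single IP places on the site stays correspondingly lower.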

5. Reducing CAPTCHAs

While proxies don’t eliminate CAPTCHAs entirely, they reduce their frequency by making your requests look like they’re coming from different users. Rotating proxies are particularly effective for this.

For example, a developer building a tool to scrape job listings might use proxies to collect data from multiple job boards without getting blocked. Similarly, a marketer tracking social media trends can use proxies to access region-specific posts, ensuring comprehensive data collection.

How ProxyScrape Solves These Problems

When it comes to choosing a proxy provider, ProxyScrape stands out as a reliable, affordable, and user-friendly option. Here’s how they address the challenges of web scraping:

- Datacenter Proxies: ProxyScrape offers over 40,000 HTTP and SOCKS5 proxies with 99.9% uptime, unlimited bandwidth, and unlimited concurrent connections. These are perfect for cost-effective, large-scale scraping tasks.

- Residential Proxies: With 120 million+ back-connect rotating proxies, ProxyScrape provides high anonymity and a 99.9% success rate. These are ideal for sensitive or geo-restricted scraping, with a response time of just 0.8 seconds.

- Premium Proxies: Offering over 40,000 proxies with a 99% success rate, these include features like IP authentication and API integration, making them easy to integrate with your web scraping tools.

- Dedicated Proxies: For exclusive access, ProxyScrape’s dedicated proxies provide private IPs, unlimited bandwidth, and speeds up to 1 Gbps, backed by 24/7 live chat support.

- Additional Tools: ProxyScrape’s user-friendly dashboard lets you manage proxies, view performance analytics, and access API keys. Their Proxy Checker tests proxy speed, performance, and security, ensuring you always use the best proxies. They also offer a free proxy list, updated every 5 minutes, for smaller projects.

ProxyScrape’s commitment to quality—99.9% uptime, ethically sourced proxies, and 24/7 support—makes it a top choice for developers, analysts, and marketers. Whether you’re scraping for market research or building a data pipeline, ProxyScrape has the tools to keep your projects running smoothly. Get started with ProxyScrape.

Best Practices for Using Proxies in Web Scraping

To maximize the effectiveness of proxies in your web scraping projects, follow these best practices:

- Choose the Right Proxy Type:

  - Use datacenter proxies for cost-effective tasks with low anonymity needs.

  - Opt for residential proxies for high-anonymity or geo-restricted scraping.

  - Leverage rotating proxies for large-scale projects to avoid blocks.

- Manage Your Proxy Pool:

  - Rotate proxies regularly to distribute requests and minimize detection.

  - Use ProxyScrape’s Proxy Checker to monitor performance and replace underperforming proxies.

- Respect Website Terms:

  - Check the website’s terms of service to ensure your scraping is legal and ethical.

  - Avoid overwhelming servers by respecting request rate limits.

- Use Proxy Management Tools:

  - ProxyScrape’s dashboard and API simplify proxy management and integration, saving you time and effort.

- Test and Optimize:

  - Test proxies before deploying them to ensure they meet your speed and reliability needs.

  - Adjust your scraping strategy based on website behavior to stay under the radar.

By following these practices, you’ll ensure your scraping projects are efficient, reliable, and compliant.
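Two of these practices, testing proxies before use and respecting rate limits, are easy to sketch in code. The example below is a hypothetical illustration: `probe` is a caller-supplied function that makes a test request through a proxy and raises on failure, and the latency threshold and interval are arbitrary values you would tune yourself.

```python
import time
from typing import Callable, List, Sequence


def filter_working_proxies(
    proxies: Sequence[str],
    probe: Callable[[str], object],
    max_latency: float = 2.0,
) -> List[str]:
    """Keep only proxies whose probe succeeds within max_latency seconds."""
    healthy = []
    for proxy in proxies:
        start = time.perf_counter()
        try:
            probe(proxy)
        except Exception:
            continue  # probe failed: drop this proxy
        if time.perf_counter() - start <= max_latency:
            healthy.append(proxy)
    return healthy


class Throttle:
    """Enforce a minimum pause between consecutive requests."""

    def __init__(self, min_interval: float, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self) -> None:
        """Sleep just long enough to keep min_interval seconds between calls."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

Calling `throttle.wait()` before each request keeps the scraper at or below one request per `min_interval` seconds, and running `filter_working_proxies` before a job starts avoids wasting requests on dead proxies.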

Conclusion

Proxies are the key to unlocking the full potential of web scraping. They help you avoid CAPTCHAs, bypass IP blocking, access geo-restricted content, and maintain anonymity, all while boosting performance. Whether you’re a developer building web scraping tools, a data analyst collecting insights, or a marketer tracking competitors, proxies make your work faster and more reliable.

ProxyScrape is the best proxy provider for your scraping needs, offering a range of proxies—datacenter, residential, and rotating—with tools to simplify management and ensure success. Don’t let blocks or restrictions slow you down. Sign up with ProxyScrape today and take your web scraping to the next level.

FAQ

1. What is the difference between datacenter and residential proxies?

Datacenter proxies are hosted on data center servers and are cheaper but more likely to be detected. Residential proxies use real residential IPs, offering higher anonymity but at a higher cost.

2. How do I choose the best proxy for my scraping project?

Consider your project’s scale, anonymity needs, and budget. Residential proxies are best for sensitive or geo-restricted tasks, while datacenter proxies suit budget-friendly projects. Rotating proxies are ideal for large-scale scraping.

3. Is it legal to use proxies for web scraping?

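The legality depends on several factors, including the website’s terms of service, the kind of data you collect, and the laws that apply where you operate. Review the site’s terms before scraping, and seek legal advice if you are unsure.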

4. How can I avoid getting blocked while scraping?

Use proxies to distribute requests across multiple IPs, rotate them regularly, and respect rate limits. Rotating proxies are particularly effective for avoiding IP blocking.

5. What are rotating proxies, and when should I use them?

Rotating proxies change your IP with each request or periodically, reducing the risk of blocks. Use them for large-scale or high-frequency scraping projects.

6. How does ProxyScrape ensure proxy quality?

ProxyScrape guarantees 99.9% uptime, conducts regular performance checks, and offers 24/7 support to ensure reliable, high-quality proxies.