From Chatbots to Multi-Agent Systems: How AI Has Evolved

Key Points

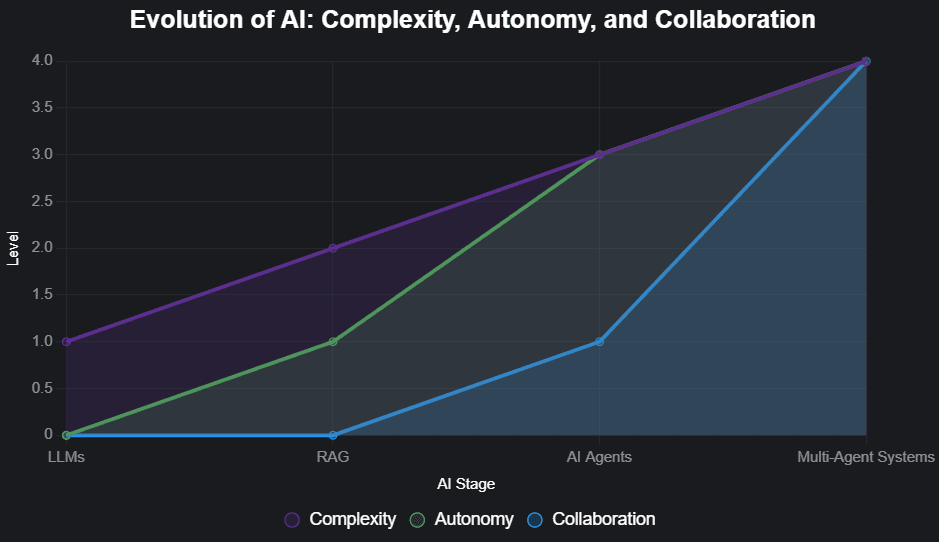

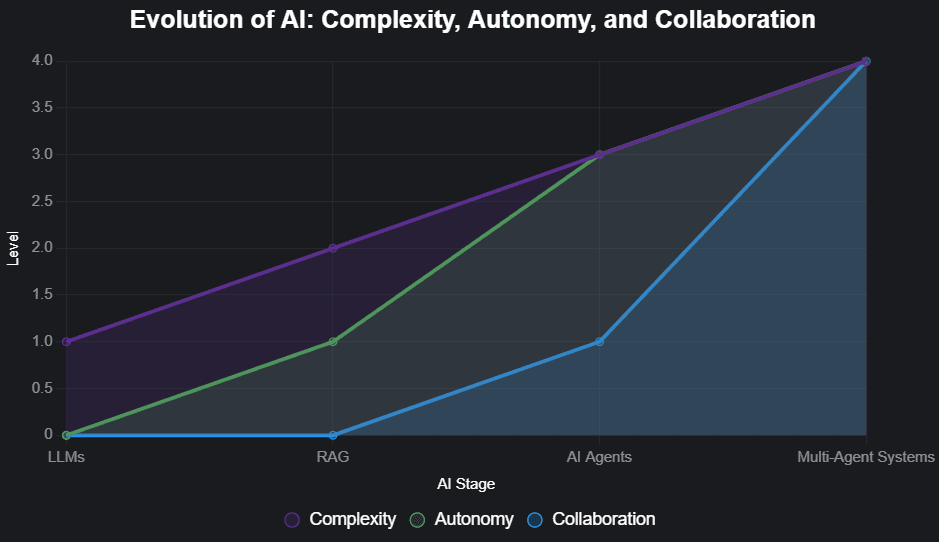

- AI has evolved from simple chatbots using Large Language Models (LLMs) to complex Multi-Agent Systems, enabling more sophisticated tasks.

- Each stage builds on the last: LLMs generate text, RAG adds external knowledge, AI Agents act autonomously, and Multi-Agent Systems collaborate.

- Developers benefit by using these tools for tasks like automation, customer support, and complex problem-solving.

- Multi-Agent Systems look like a promising direction for large-scale, collaborative tasks, though designing them can be challenging.

Remember when AI was just fancy autocomplete? Those days are long gone, my friend. Today, we’re talking about AI that can chat, think, plan, and even team up with other AIs to get stuff done. Welcome to the wild world of Multi-Agent Systems, where the future of intelligent tools is being coded right now.

In this post, we’re taking a joyride through the evolution of AI tools for developers—from the early days of chatbots powered by Large Language Models (LLMs) to today’s collaborative Multi-Agent Systems. We’ll break down each stage, show you what they’re good for, and toss in some tips to help you build smarter systems. So, grab your coffee, and let’s dive in!

Quick Summary

- What Multi-Agent Systems do: They let AI agents collaborate to tackle big, complex tasks.

- Why AI’s evolving this way: To overcome the limits of solo models and harness specialized skills.

- What devs gain at each stage:

  - LLMs: Killer text generation and understanding.

  - RAG: Access to external knowledge, fewer made-up answers.

  - AI Agents: Autonomy to act in the real world.

  - Multi-Agent Systems: Scalability for problems needing diverse expertise.

What Are Multi-Agent Systems?

Multi-Agent Systems are like a team of AI specialists working together. Each agent has its own skills, and they communicate to solve problems too big for one AI alone. Think of it as a coding team where one person handles the front-end, another the back-end, and someone else manages the database—together, they build something amazing.

Why Does This Evolution Matter?

This progression from chatbots to collaborative AI systems means developers can now create tools that are smarter, more autonomous, and capable of tackling real-world challenges. Whether you’re building a customer service bot or automating a complex workflow, understanding these stages helps you pick the right tool for the job.

What’s Next for Developers?

The evidence leans toward Multi-Agent Systems becoming a game-changer for developers. They’re perfect for tasks requiring diverse expertise, but they’re not always easy to set up. Start exploring frameworks like ReAct or ReWOO to dip your toes into agent-based AI, and keep an eye on emerging tools to stay ahead.

From LLMs to Agentic Intelligence: A Quick Tour

What Are LLMs?

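Large Language Models (LLMs) are the OGs of modern AI. Think of them as super-smart librarians who’ve read every book in the library (or at least, a massive chunk of the internet). Models like GPT-3, BERT, and T5 are built on the transformer architecture, which is a fancy way of saying they use “attention” to weigh how each word relates to its context. Generative models like GPT-3 and T5 use that to produce human-like text, while BERT is mainly used to understand text (classification, search) rather than to write it.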
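To make “attention” a little less hand-wavy: the core operation in the original Transformer paper is scaled dot-product attention, softmax(QKᵀ/√d)·V. The plain-Python sketch below computes it for a single query over toy vectors we made up for illustration; real models learn the query, key, and value projections and run many attention heads over whole matrices at once.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query: list[float], keys: list[list[float]],
           values: list[list[float]]) -> tuple[list[float], list[float]]:
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # How well the query matches each key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # The output is the attention-weighted average of the value vectors.
    output = [sum(w * value[i] for w, value in zip(weights, values))
              for i in range(len(values[0]))]
    return output, weights

# Toy example: the query resembles the first key, so the first value dominates.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
output, weights = attend(query, keys, values)
print(weights, output)
```

Because the query points in the same direction as the first key, that key gets the larger softmax weight, and the output leans toward the first value vector.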

They’re trained on huge datasets, so they can write emails, translate languages, or even whip up a poem about your cat. But they’re not perfect. They’re like that friend who’s great at storytelling but sometimes gets the facts wrong.

Why They Changed the Game (But Didn’t Finish It)

LLMs blew our minds by making chatbots and virtual assistants feel almost human. They powered tools like early versions of ChatGPT, customer service bots, and content generators. But here’s the catch: they’re stuck with what they learned during training. No internet access, no real-time updates, and sometimes they hallucinate—spouting plausible but totally made-up nonsense.

Plus, on their own they can only produce text. Want an AI to book a flight or check your calendar? LLMs are like, “Uh, I can write you a nice email about it, but that’s it.” That’s why we needed the next step.

RAG: Giving AI a Search Engine Brain

How Retrieval-Augmented Generation Works

Retrieval-Augmented Generation (RAG) is like giving your chatbot Google powers—except it won’t open 47 tabs and forget what it was looking for. RAG combines the text-generating magic of LLMs with a retrieval system that pulls relevant info from a database or external source.

Here’s the gist:

- Retriever: Searches a database for documents or snippets related to your query using techniques like dense retrieval (think embeddings) or classic methods like TF-IDF.

- Generator: Takes those retrieved bits and weaves them into a coherent answer using an LLM.

This setup grounds the AI’s responses in real data, making them more accurate and less likely to invent facts.
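Here’s a minimal, dependency-free sketch of that retriever-generator split, assuming a tiny in-memory knowledge base (the documents and the “Acme CLI” product are invented for illustration). The retriever ranks documents with a simplified TF-IDF score; the generator step is shown only as the grounded prompt you would hand to whatever LLM you use, since the model call itself depends on your provider.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice these would be chunks of your docs.
DOCS = [
    "Acme CLI 4.2 is the latest release and adds offline mode.",
    "Employees get 25 vacation days per year.",
    "Acme CLI can be installed with the system package manager.",
]

def tokenize(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Retriever: rank documents with a simple TF-IDF score."""
    tokenized = [tokenize(d) for d in docs]
    # Document frequency: in how many documents each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # Smoothed IDF: rare terms count more, common terms still count a little.
    idf = {term: math.log(len(docs) / count) + 1.0 for term, count in df.items()}
    query_tokens = tokenize(query)

    def score(doc_tokens: list[str]) -> float:
        tf = Counter(doc_tokens)
        return sum(tf[t] / len(doc_tokens) * idf.get(t, 0.0) for t in query_tokens)

    ranked = sorted(range(len(docs)), key=lambda i: score(tokenized[i]), reverse=True)
    return [docs[i] for i in ranked[:k]]

def build_prompt(query: str, passages: list[str]) -> str:
    """Generator input: ground the LLM in the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

question = "What is the latest version of Acme CLI?"
prompt = build_prompt(question, retrieve(question, DOCS, k=2))
print(prompt)  # this string is what you would send to your LLM of choice
```

Swapping the TF-IDF scorer for embedding similarity gives you dense retrieval; the prompt-building half stays the same.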

Best RAG Use Cases for Developers

RAG shines when you need precise, up-to-date answers. Here are some sweet spots:

- Knowledge-based chatbots: Answer questions about company policies or product specs.

- Question-answering systems: Pull facts from manuals or wikis for tech support.

- Document summarization: Condense long reports by grabbing key sections.

For example, imagine a chatbot for a tech company. Without RAG, it might guess the latest software version. With RAG, it pulls the exact version from the company’s knowledge base. Boom—accuracy!

Pros and Caveats

| Pros | Caveats |
|---|---|
| More accurate answers | Depends on quality of retrieved data |
| Access to external knowledge | Can be computationally heavy |
| Reduces hallucinations | Slight latency for real-time apps |

Tip: Use libraries like LangChain or Haystack to build RAG systems fast. They handle the retriever-generator dance for you.

AI Agents: Autonomy with a Purpose

What Are AI Agents?

AI Agents are like chatbots that got a promotion. They don’t just talk—they act. An agent can perceive its environment (via text, images, or APIs), reason about what to do, and take actions to achieve goals. Think of them as digital assistants with a to-do list and the skills to check it off.

Unlike chatbots, which are all about text, agents can interact with the world—calling APIs, controlling devices, or even navigating websites.

Why They’re More Than a Chatbot

Chatbots are great for banter, but agents get stuff done. Need to book a flight? An agent can browse travel sites, fill out forms, and confirm your ticket. Want to automate a workflow? An agent can pull data from one system, process it, and push it to another.

They’re built with three key parts:

- Perception: Observing the environment (e.g., reading a webpage).

- Reasoning: Deciding what to do next.

- Action: Executing tasks (e.g., clicking a button).
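A toy version of those three parts, assuming a made-up calendar and booking “API” (plain Python functions here) and a hard-coded rule where a real agent would ask an LLM to decide:

```python
from typing import Callable

# Hypothetical stand-ins for real APIs (a calendar service, a booking service).
BUSY_DAYS = {"friday"}

def check_calendar(day: str) -> str:
    return "busy" if day in BUSY_DAYS else "free"

def book_flight(day: str) -> str:
    return f"Flight booked for {day}."

# Tool registry: the actions the agent is allowed to take.
ACTIONS: dict[str, Callable[[str], str]] = {"book_flight": book_flight}

def perceive(request: str) -> dict[str, str]:
    """Perception: gather what the agent needs to know about its environment."""
    day = request.lower().rstrip("?.!").split()[-1]  # naive parsing of "... on <day>"
    return {"day": day, "calendar": check_calendar(day)}

def reason(observation: dict[str, str]) -> tuple[str, str]:
    """Reasoning: a hard-coded rule standing in for an LLM's decision."""
    if observation["calendar"] == "busy":
        return "reply", f"You're busy on {observation['day']}, so I didn't book anything."
    return "book_flight", observation["day"]

def act(decision: tuple[str, str]) -> str:
    """Action: call the chosen tool, or just reply to the user."""
    name, argument = decision
    if name == "reply":
        return argument
    return ACTIONS[name](argument)

def run_agent(request: str) -> str:
    return act(reason(perceive(request)))

print(run_agent("Book me a flight on Monday"))
print(run_agent("Book me a flight on Friday"))
```

In a real agent, `reason` is the LLM call and `ACTIONS` is your tool registry; the perceive, reason, act shape stays the same.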


Frameworks: ReAct, ReWOO Explained Simply

Building agents from scratch is tough, so frameworks like ReAct and ReWOO make life easier.

- ReAct (Reasoning and Acting): This framework has the agent think and act in a loop. It reasons about the next step, takes an action, checks the result, and repeats. It’s great for tasks where feedback is key, like navigating a website. Check out ReAct’s paper for the nerdy details.

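- ReWOO (Reasoning WithOut Observation): ReWOO has a planner write out every step upfront, using placeholders for results it hasn’t seen yet. Workers then run the tools, and a solver combines the evidence, without sending each intermediate result back to the LLM for a fresh decision. This saves tokens (and cash) when calling LLMs and can handle tool failures better. See ReWOO’s GitHub for code examples.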

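Example: Here’s a Python-style sketch of a ReAct agent booking a flight (the helper functions are placeholders):

```python
# ReAct loop: reason about the latest observation, act, observe the result, repeat.
# observe_environment, reason, and execute are placeholders for your own
# page-reading, LLM-prompting, and browser-automation code.
observation = observe_environment()   # e.g., read the current webpage
while True:
    action = reason(observation)      # e.g., decide to click "Search Flights"
    if action == "finish":            # the model reports the booking is done
        break
    observation = execute(action)     # perform the click, capture the new page
```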
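For contrast with the ReAct loop above, here’s a minimal sketch of ReWOO’s plan-then-execute structure. It mirrors the planner, worker, and solver roles and the `#E1`-style evidence placeholders described for ReWOO, but the plan, the tools, and the flight data are hard-coded stand-ins we invented; in practice the planner and solver are LLM calls.

```python
import re
from typing import Callable

# Hypothetical tools; a real agent would call search APIs, calculators, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "Search": lambda query: {"cheapest flight to Paris": "AF123 at $420"}.get(query, "no result"),
    "ExtractFlightCode": lambda text: text.split()[0],
}

def plan(task: str) -> list[tuple[str, str, str]]:
    """Planner: in ReWOO, one LLM call writes the whole plan up front.
    Hard-coded here. Each step is (evidence variable, tool, tool input),
    and inputs may reference earlier evidence such as #E1."""
    return [
        ("#E1", "Search", "cheapest flight to Paris"),
        ("#E2", "ExtractFlightCode", "#E1"),
    ]

def work(steps: list[tuple[str, str, str]]) -> dict[str, str]:
    """Worker: run every step in order without going back to the planner,
    substituting earlier results into later inputs."""
    evidence: dict[str, str] = {}
    for var, tool, tool_input in steps:
        resolved = re.sub(r"#E\d+", lambda m: evidence[m.group(0)], tool_input)
        evidence[var] = TOOLS[tool](resolved)
    return evidence

def solve(task: str, evidence: dict[str, str]) -> str:
    """Solver: in ReWOO a final LLM call combines task and evidence. A template here."""
    return f"{task}: book {evidence['#E2']} ({evidence['#E1']})"

task = "Find the cheapest flight to Paris"
print(solve(task, work(plan(task))))
```

The design payoff: the LLM is consulted twice (once to plan, once to solve) no matter how many tool calls the plan contains, whereas a ReAct agent calls the model on every loop iteration.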

Tip: Start with LangGraph for ReWOO or ReAct implementations. It’s developer-friendly and integrates with LLMs.

Multi-Agent Systems: The Future Is Collaborative

What Are Multi-Agent Systems?

Multi-Agent Systems (MAS) are like an AI Avengers team. Multiple agents, each with unique skills, work together to solve problems too big for one hero. They communicate, share info, and coordinate actions, often showing emergent behavior—think of a flock of birds moving as one.

For example, one agent might handle natural language, another crunches data, and a third makes decisions. Together, they’re unstoppable.

Why Solo Agents Aren’t Enough Anymore

Solo agents are awesome, but they’re like a one-person band. They can only do so much. Complex tasks—like running a customer service platform or simulating a supply chain—need diverse expertise. Multi-Agent Systems split the work, making them more efficient and scalable.

Plus, they can be more robust. In a well-designed system, if one agent fails, others can pick up the slack, whereas a single agent is a single point of failure that can crash the whole show.
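One simple way to get that robustness is a fallback chain: if an agent errors out, hand the task to the next one. A minimal sketch, with two hypothetical agents (the first simulates an outage):

```python
from typing import Callable

Agent = Callable[[str], str]

# Hypothetical agents: the primary one simulates an upstream outage.
def primary_agent(task: str) -> str:
    raise TimeoutError("upstream API timed out")

def backup_agent(task: str) -> str:
    return f"backup_agent handled: {task}"

def run_with_fallback(task: str, agents: list[Agent]) -> str:
    """Try each agent in order; the first one that succeeds wins."""
    errors: list[str] = []
    for agent in agents:
        try:
            return agent(task)
        except Exception as exc:  # in production, catch narrower error types
            errors.append(f"{agent.__name__}: {exc}")
    raise RuntimeError("all agents failed: " + "; ".join(errors))

print(run_with_fallback("summarize ticket 42", [primary_agent, backup_agent]))
```

Real systems usually add retries, timeouts, and health checks on top, but the idea is the same: no single agent is a single point of failure.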

Real Examples

- Computer-Using Agent (CUA): OpenAI’s CUA, part of their Operator tool, navigates websites using natural language commands. It’s a single agent but can be part of a multi-agent setup where one CUA books flights while another handles hotel reservations. Learn more at OpenAI’s CUA repo.

- Collaborative AI: Systems like Anthropic’s multi-agent research tool let agents tackle open-ended tasks, like finding business opportunities or debugging code. See Anthropic’s blog.
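Both examples share a shape often called orchestrator-worker: a lead agent breaks a task into pieces, hands them to subagents that run in parallel, and combines the results. Anthropic’s blog describes its research system this way. The sketch below is a minimal asyncio version under our own assumptions: `decompose` and `subagent` are hypothetical placeholders for LLM and tool calls, and the flight/hotel split echoes the CUA example.

```python
import asyncio

def decompose(task: str) -> list[str]:
    """Lead agent's planning step (hypothetical): a real system would ask an LLM."""
    return [f"{task}: find flights", f"{task}: find hotels"]

async def subagent(subtask: str) -> str:
    """Worker (hypothetical): a real one would call an LLM plus browsing or search tools."""
    await asyncio.sleep(0.1)  # simulate slow I/O such as loading a web page
    return f"results for '{subtask}'"

async def lead_agent(task: str) -> str:
    subtasks = decompose(task)
    # Run all subagents concurrently; gather returns results in input order.
    results = await asyncio.gather(*(subagent(s) for s in subtasks))
    # Synthesis step: a real lead agent would have an LLM merge these.
    return "\n".join(results)

print(asyncio.run(lead_agent("Trip to Tokyo")))
```

Because the subagents mostly wait on I/O (web pages, APIs), running them concurrently cuts wall-clock time compared with doing the subtasks one after another.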

Example: A multi-agent system for customer support might look like:

| Agent | Role |
|---|---|
| NLP Agent | Understands customer queries |
| Knowledge Agent | Retrieves info from a database |
| Action Agent | Updates customer records |
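A minimal sketch of how those three agents might hand work to each other, assuming a shared `Ticket` object as the message they pass along. The knowledge base entry, the customer IDs, and the keyword-based intent detection are all hypothetical stand-ins for an LLM, a real RAG pipeline, and a CRM.

```python
from dataclasses import dataclass, field

@dataclass
class Ticket:
    """Shared message the agents pass along and enrich."""
    customer_id: str
    message: str
    intent: str = ""
    answer: str = ""
    log: list[str] = field(default_factory=list)

# Hypothetical stand-ins for a real knowledge base and CRM.
KNOWLEDGE_BASE = {"refund": "Refunds are processed within 5 business days."}
CUSTOMER_RECORDS: dict[str, list[str]] = {}

def nlp_agent(ticket: Ticket) -> Ticket:
    """Understands the query (keyword rule standing in for an LLM classifier)."""
    ticket.intent = "refund" if "refund" in ticket.message.lower() else "other"
    ticket.log.append(f"nlp_agent: intent={ticket.intent}")
    return ticket

def knowledge_agent(ticket: Ticket) -> Ticket:
    """Retrieves an answer for the detected intent (where RAG would plug in)."""
    ticket.answer = KNOWLEDGE_BASE.get(ticket.intent, "Escalating to a human agent.")
    ticket.log.append("knowledge_agent: answer attached")
    return ticket

def action_agent(ticket: Ticket) -> Ticket:
    """Updates the customer's record with what happened."""
    CUSTOMER_RECORDS.setdefault(ticket.customer_id, []).append(ticket.intent)
    ticket.log.append("action_agent: record updated")
    return ticket

def handle(ticket: Ticket) -> Ticket:
    # A fixed hand-off order; richer systems let agents route work dynamically.
    for agent in (nlp_agent, knowledge_agent, action_agent):
        ticket = agent(ticket)
    return ticket

result = handle(Ticket("c-001", "Hi, how do I get a refund?"))
print(result.answer)
print(result.log)
```

A fixed pipeline like this is the simplest coordination scheme; agent frameworks can layer on routing, shared memory, and agents that decide for themselves who handles the next step.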

Why This Evolution Matters to You

Use Cases for Devs Today

Here’s how you can use these tools now:

- Automated support: Combine RAG for accurate answers and agents for ticket resolution.

- Personal assistants: Build agents to manage schedules or shop online.

- Content creation: Use LLMs for drafts and RAG for fact-checking.

- Complex automation: Deploy multi-agent systems for data analysis and reporting.

When to Use What

| Tool | Best For |
|---|---|
| LLM | Text generation, chatbots |
| RAG | Knowledge retrieval, Q&A |
| AI Agent | Task automation, real-world interaction |
| Multi-Agent | Complex, collaborative tasks |

What to Watch For

- New frameworks: Tools like LangChain and Semantic Kernel are making agents easier to build.

- Integration: Expect tighter links with existing software and APIs.

- Ethics: As agents get more autonomous, ensure they act responsibly.

- Scalability: Enterprise deployments may need to coordinate large numbers of agents, which puts pressure on cost, latency, and orchestration.

Conclusion

We’ve gone from AI that could barely string a sentence together to systems that collaborate like a dream team. LLMs gave us chatbots, RAG made them smarter, agents added autonomy, and Multi-Agent Systems brought collaboration to the table.

As a developer, this is your playground. Whether you’re tweaking a chatbot or building a multi-agent masterpiece, the tools are here. So, don’t just build smart tools—build collaborative ones. The future’s multi-agent, and it’s calling your name.

FAQ

- What’s the difference between AI Agents and Multi-Agent Systems?

  Agents are solo players that act autonomously. Multi-Agent Systems are teams of agents working together, often with specialized roles.

- Can I build a multi-agent system without deep ML expertise?

  Yes! Frameworks like LangChain abstract the heavy lifting, letting you focus on logic and integration.

- Is RAG still useful if I use agents?

  Totally. RAG can power an agent’s knowledge base, making its actions more informed.

- What are the top frameworks for building AI agents?

  LangChain (including LangGraph) and Semantic Kernel are solid choices, and ReAct and ReWOO are popular agent patterns to implement with them.

- Do Multi-Agent Systems work in real time?

  Many can, for tasks like live support or simulations, but every agent hand-off and LLM call adds latency, so keep the number of steps small when response time matters.

- How do I know when to switch to a multi-agent system?

  If your task needs diverse skills or scales beyond one agent’s capacity, go multi-agent.

- Can these systems scale for enterprise workloads?

  Yes, with careful design, though coordinating large numbers of agents on big tasks adds cost, latency, and complexity.