Meta AI and Privacy: A Threat You Can’t Ignore

Imagine this: your social media posts, comments, and even your photos — quietly used to train one of the world’s most powerful AIs. That’s not science fiction. That’s Meta. Yeah, it’s a bit like finding out your diary’s been photocopied and handed out at a tech conference. Meta AI, the shiny new ChatGPT competitor from the folks who brought you Facebook and Instagram, is making waves—not just for its cool features, but for the privacy storm it’s stirring up.

So, why should you care? Well, every public post, comment, or photo you’ve shared on Meta’s platforms could be fueling their AI models. While Meta claims it’s all to make your digital life better, privacy advocates are waving red flags, saying this could erode your control over your data. Let’s dive into what Meta AI is, why it’s raising eyebrows, and what you can do to keep your digital self safe, all in Blurbify’s signature “now I get it” style.

Key Points

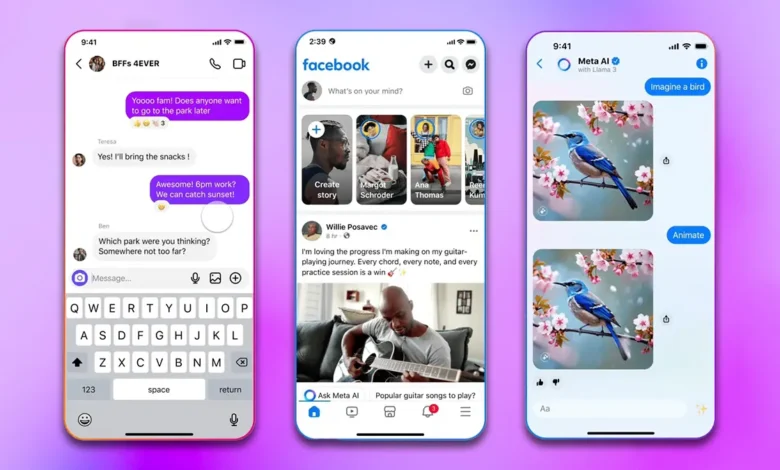

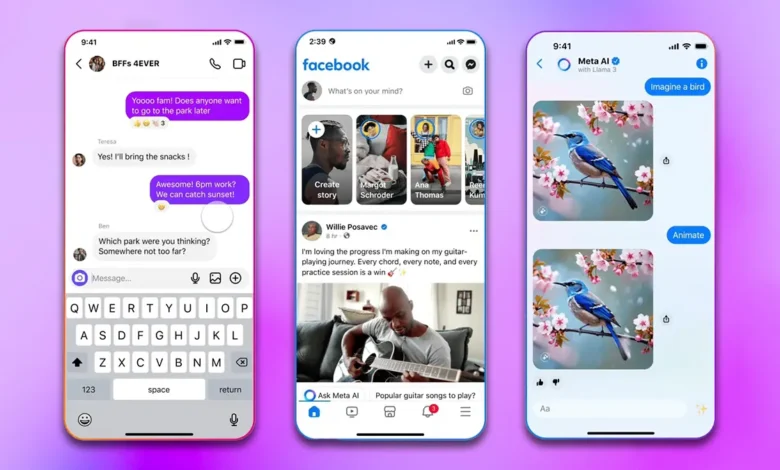

- Meta AI Overview: Meta AI is an AI assistant integrated into Facebook, Instagram, WhatsApp, and Messenger, offering features like answering questions and generating images, but it relies heavily on user data for training.

- Privacy Concerns: Meta has confirmed it uses public posts, comments, and images to train its AI, relying on an opt-out model rather than explicit user consent, which raises concerns about data privacy and autonomy.

- Controversy: Privacy advocates, like noyb, argue Meta’s opt-out model violates laws like GDPR, while Meta insists it has safeguards like excluding private messages and offering opt-out options.

- User Action: You can take steps to protect your privacy, but the complexity of opting out and the permanence of data in AI models make it a tricky landscape.

What is Meta AI?

Meta AI is like that super-smart friend who always has an answer, except this friend lives in your social media apps. It’s an AI assistant that can chat, generate images, and pull real-time info, available on its own and woven into Meta’s platforms. For developers, it’s a fascinating example of a large language model (LLM) in action: it first launched on Meta’s Llama 2 and has since moved to newer Llama releases, trained on massive datasets to understand everything from slang to historical trivia.

Why the Privacy Fuss?

The catch? Meta AI needs data—lots of it. Public posts, comments, and images from Facebook and Instagram are fair game, and while Meta says private messages are off-limits, the line between public and private is blurrier than a low-res meme. Privacy advocates worry that without clear consent, users are losing control over their digital footprints. Plus, once your data trains an AI, it’s like trying to unbake a cake—good luck getting it out.

What Can You Do?

You’re not powerless! Reviewing privacy settings, opting out (if you’re in the EU), and being mindful of what you post can help. But let’s be real: completely dodging Meta’s data net is tough. Staying informed and pushing for transparency is your best bet.


Picture this: you’re scrolling through Instagram, double-tapping a cute dog pic, when suddenly you realize that post might be teaching an AI to be smarter than you. Welcome to the world of Meta AI, where your social media life is both the playground and the fuel for Meta’s latest tech adventure. As developers and tech enthusiasts, we love cool tools, but Meta AI’s privacy implications are giving us pause. Is it a game-changer or a privacy nightmare? Let’s break it down in true Blurbify style—clear, fun, and straight to the point.

What is Meta AI? Your New Digital Sidekick

Meta AI is Meta’s answer to ChatGPT, a versatile AI assistant baked into Facebook, Instagram, WhatsApp, and Messenger. It’s like having a super-smart intern who can answer questions, whip up images, and even spice up your group chats. Originally launched on Meta’s Llama 2 model (and since upgraded to newer Llama versions), it’s designed to understand everything from pop culture to quantum physics (okay, maybe not that last one, but it’s close).

For developers, Meta AI is a masterclass in LLMs. It learns patterns from vast datasets, which makes it great at generating human-like responses, and it pairs with image-generation models to produce photorealistic pictures. You can ask it to write code snippets, explain algorithms, or even create a meme-worthy image of your cat in a spacesuit. But here’s the rub: all that intelligence comes from data, your data.

Blurbify Blurb: Meta AI is like a super-smart barista who knows your coffee order by heart, but might also be jotting down your life story for a novel.

How Meta Uses Your Data: The Not-So-Fine Print

To make Meta AI as clever as it is, Meta needs data—petabytes of it. They’re using public content from Facebook and Instagram, like posts, comments, and images, to train their models. In May 2024, Meta confirmed this includes anything you’ve shared publicly since 2007, even from that cringey phase when you posted song lyrics as your status.

Here’s where it gets dicey: Meta claims private messages are excluded, but privacy advocates argue the distinction is fuzzier than a hipster’s beard. For example, your “public” likes or follows can reveal personal details, and the AI can infer sensitive info from seemingly innocent posts. Posted a pic from your vacation? The AI might deduce your travel habits.

For techies, this is a reminder of how LLMs work: they’re data sponges, absorbing patterns to predict what you’ll say or like next. But once your data has shaped a model’s weights, there’s no practical way to pull it back out short of retraining. Think of it like trying to remove a single ingredient from a baked cake: good luck.
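Here’s the “unbaking” problem in miniature. This is a deliberately tiny Python sketch, not how Meta trains anything: the word-count “model” and the `train_word_counts` function are hypothetical stand-ins for a real LLM, which stores what it learns across billions of shared weights instead of one tidy counter.

```python
from collections import Counter


def train_word_counts(posts):
    """Hypothetical toy 'model': word frequencies learned from posts.

    A real LLM learns weights, not word counts, but the principle is the
    same: training copies statistics out of the data into the model.
    """
    counts = Counter()
    for post in posts:
        counts.update(post.lower().split())
    return counts


posts = [
    "Loving the beach in Lisbon",
    "Coffee again at my favorite cafe",
    "Back in Lisbon for the weekend",
]

# "Train" once and keep the resulting snapshot.
model = train_word_counts(posts)

# The user deletes their Lisbon posts from the dataset...
posts = [p for p in posts if "lisbon" not in p.lower()]

# ...but the already-trained snapshot still remembers them.
print(model["lisbon"])                      # 2
# Only retraining on the cleaned dataset forgets them.
print(train_word_counts(posts)["lisbon"])  # 0
```

In this toy you could subtract the deleted posts’ counts by hand. In a neural network, each post’s influence is blended into weights shared with everything else, so the dependable fix is retraining without that data, an expensive step for a model at Meta AI’s scale.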

Blurbify Blurb: Your data’s like glitter—once it’s out there, it’s everywhere, and you’ll never get it all back.

Meta’s Privacy Safeguards: Safety Net or Smoke Screen?

Meta isn’t clueless about the backlash. They’ve rolled out safeguards to ease your mind:

- No Private Data: They swear private messages and posts are off-limits for AI training.

- Opt-Out Option: EU users can fill out a form to opt out, though it’s buried deeper than a bug in a 10,000-line codebase.

- Transparency Tools: AI-generated images get watermarks, and you can delete chat history with commands like “/reset-ai.”

- Internal Reviews: Meta says they’ve got a crack team checking for privacy compliance.

Sounds reassuring, right? Well, hold your commits. The opt-out process is a labyrinth—think filling out a tax form in a foreign language. You need to provide detailed reasons, and even then, past data might already be part of the AI’s “brain.” Plus, those “public” posts can still reveal more than you’d like, like your political leanings or favorite coffee shop.

Blurbify Blurb: Meta’s safeguards are like a screen door on a submarine—better than nothing, but don’t bet on staying dry.


Why Privacy Advocates Are Losing It

Privacy watchdogs, especially in Europe, are sounding alarms louder than a bug in production. Groups like noyb have filed complaints in 11 countries, claiming Meta’s approach violates GDPR. Here’s what’s got them riled:

- No Consent: Meta uses an opt-out model, meaning your data’s used unless you say no. Many users don’t even know this is happening.

- Massive Data Scope: They’re tapping everything public since 2007, including dormant accounts. That old MySpace vibe you left on Facebook? It’s back, baby.

- No Deletion Option: Once your data trains the AI, it’s part of the model forever. GDPR’s “right to be forgotten” is more like “right to be vaguely remembered.”

- Blurry Lines: Even without private messages, public data can reveal sensitive stuff, like your health or beliefs.
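The opt-in versus opt-out fight comes down to one default: what happens to people who never answer. The Python sketch below is illustrative only; the `User` class and `eligible_for_training` function are hypothetical names, not Meta’s pipeline. It shows how a user who stays silent (“cai”) ends up in the training set under opt-out but stays out under opt-in.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    name: str
    # Hypothetical field: True = said yes, False = said no,
    # None = never responded to any notice.
    consent: Optional[bool] = None


def eligible_for_training(user: User, policy: str) -> bool:
    """Decide whether a user's public posts enter a training set.

    opt-out: included unless the user explicitly said no.
    opt-in:  excluded unless the user explicitly said yes.
    """
    if policy == "opt-out":
        return user.consent is not False
    if policy == "opt-in":
        return user.consent is True
    raise ValueError(f"unknown consent policy: {policy}")


users = [User("ana", consent=True), User("ben", consent=False), User("cai")]

for policy in ("opt-out", "opt-in"):
    included = [u.name for u in users if eligible_for_training(u, policy)]
    print(policy, included)
# opt-out ['ana', 'cai']
# opt-in ['ana']
```

Same users, same posts; flipping one default decides whether silence counts as a yes. That’s why critics focus on how many users never see, or never act on, Meta’s notice.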

The Dutch Data Protection Authority and others are watching closely, with the Irish Data Protection Commission leading the charge since Meta’s EU headquarters is in Ireland. In 2024, Meta paused its plans after regulator pushback, but by April 2025 it was back at it, citing a December 2024 EDPB opinion that it says backed its approach.

Blurbify Blurb: Privacy advocates are like code reviewers spotting bugs Meta hopes you’ll ignore—persistent and not wrong.

The Legal Lowdown: What’s Happening?

The regulatory scene is a bit like debugging a legacy system—messy but necessary. Here’s the rundown:

- 2024: Meta announced AI training plans but hit pause in the EU after noyb and regulators cried foul.

- April 2025: Meta resumed training, citing a December 2024 EDPB opinion as support, and promised notifications and opt-out forms.

- Ongoing: European watchdogs are still skeptical, questioning if Meta’s opt-out model is legal under GDPR.

Outside the EU, in places like the US, privacy laws are looser, giving Meta more wiggle room. This creates a global patchwork where your data’s fate depends on your zip code.

Blurbify Blurb: Regulators are like sysadmins trying to patch a server while it’s still running—good luck!

Why This Matters: Your Digital Soul’s at Stake

This isn’t just about Meta—it’s about the future of privacy in an AI-driven world. As developers, we know AI’s potential: better apps, smarter tools, maybe even self-writing code (we can dream). But without guardrails, it’s a slippery slope to a world where your data’s a commodity, not a right.

Imagine a future where every click, post, or emoji is used to train AI that predicts your next move—or sells you stuff you don’t need. Without clear consent and deletion options, you’re not a user; you’re a data mine.

Blurbify Blurb: Your data’s like your GitHub repo—valuable, but you don’t want just anyone forking it without permission.

How to Protect Yourself: Tips for the Privacy-Conscious

Worried? You’re not helpless. Here’s how to lock down your digital life:

- Check Privacy Settings: Limit who sees your posts on Facebook and Instagram. Private accounts are your friend.

- Opt Out (If You Can): EU folks, dig through Meta’s settings for the opt-out form. It’s a slog, but worth it.

- Think Before You Post: Public posts are AI fodder, so skip sharing your life’s plot twists.

- Use Encryption: WhatsApp’s end-to-end encryption keeps private chats private, though anything you send directly to Meta AI is shared with Meta so it can respond.

- Stay Informed: Follow privacy news to know your rights and Meta’s latest moves.

| Action | Effort Level | Impact |
|---|---|---|
| Adjust Privacy Settings | Low | High (limits data exposure) |
| Opt Out of AI Training | High | Medium (past data may remain) |
| Be Selective with Posts | Medium | High (reduces public data) |
| Use Encrypted Messaging | Low | High (secures private chats) |
| Stay Informed | Medium | High (empowers better choices) |

Blurbify Blurb: Protecting your privacy’s like debugging—tedious but saves you from a crash later.

Real-World Example: The EU Pushback

In June 2024, noyb filed complaints across Europe, accusing Meta of GDPR violations. They argued that Meta’s plan to use all user data since 2007, without opt-in consent, was a privacy no-no. The Irish DPC stepped in, and Meta paused its AI training in the EU. By April 2025, Meta was back, citing a December 2024 EDPB opinion, but the opt-out process remained a headache. The episode shows that user pushback can make waves, even if it’s not a total win.

Blurbify Blurb: The EU’s like that one teammate who flags every code smell—annoying but often right.

Wrapping Up: Don’t Be Just Data

Meta AI is a tech marvel, but its privacy risks are real. From scooping up your posts to sidestepping clear consent, it’s a reminder that in the AI age, your data is power, and whoever holds it owes you accountability. As developers and tech lovers, we can marvel at the code while demanding better from the coders.

So, next time you’re liking a post or chatting on WhatsApp, remember: you’re not just a user—you’re a digital citizen. Stay curious, stay cautious, and let’s keep pushing for a tech world that’s as empowering as it is exciting.

Blurbify Blurb: Meta AI’s cool, but your privacy’s cooler. Guard it like your favorite IDE plugin.


FAQ

- What’s Meta AI?

Meta AI is a ChatGPT-like assistant in Facebook, Instagram, WhatsApp, and Messenger, handling tasks like answering questions and generating images. It’s powered by user data, which is where the privacy debate kicks in.

- What data does Meta use for AI training?

Public posts, comments, and images from Facebook and Instagram since 2007. Private messages are supposedly excluded, but public data can still reveal personal details.

- How does Meta protect my privacy?

It excludes private messages, offers opt-out forms (mostly in the EU), watermarks AI images, and lets you delete chat history. Critics say these measures don’t go far enough.

- Why are privacy advocates upset?

They argue Meta’s opt-out model, use of old data, and lack of deletion options violate GDPR and erode user control. The AI’s ability to infer sensitive info from public posts is another red flag.

- What’s the regulatory response?

In 2024, Meta paused EU AI training after complaints from noyb and regulators. In April 2025, it resumed, citing a December 2024 EDPB opinion, but the opt-out process remains clunky and scrutiny continues.

- How can I protect my privacy?

Tighten privacy settings, opt out if possible, post cautiously, use encrypted messaging like WhatsApp, and stay updated on privacy laws and Meta’s policies.

- What’s the big picture for AI and privacy?

AI’s data hunger raises questions about consent and control. While it can revolutionize tech, unchecked data use risks turning users into products. Transparency and user rights are key.

Sources We Trust:

A few solid reads we leaned on while writing this piece.

- Meta’s Privacy Matters for Generative AI Features

- noyb Urges 11 DPAs to Stop Meta’s Data Use for AI

- Meta Resumes EU AI Training After Regulator Approval