MCP vs API: Simplifying AI Agent Integration with External Data

Hey there, tech enthusiasts and code wranglers! Ever wondered how AI agents get their hands on the data they need to be truly helpful? Whether it’s fetching your latest emails, checking the weather, or diving into your project files, AI agents need to connect with the outside world. Traditionally, APIs have been the go-to for this, but there’s a new kid on the block: the Model Context Protocol (MCP), introduced by Anthropic in late 2024. Think of MCP as a universal charger for your AI: plug it in, and it works with almost anything.

In this post, we’ll break down how MCP and APIs compare, when to use each, and how they can team up to make your AI agents smarter than ever. Written in Blurbify’s signature style—clear, punchy, and just a bit cheeky—this guide will have you saying, “Ah, now I get it!” Let’s dive in.

Key Takeaways

- APIs are like trusty menus at a restaurant: reliable but rigid, requiring you to know exactly what to order.

- MCP is a dynamic, AI-friendly protocol that lets agents discover tools and data on the fly, like a universal remote.

- Use APIs for fast, predictable integrations where you control the setup.

- Use MCP for flexible, adaptive AI systems that need to handle changing tools or data sources.

- They’re not rivals: MCP often wraps APIs, combining structure with AI superpowers.

- Tools like LangGraph make MCP even easier to use, helping developers build powerful AI workflows.

Why AI Agents Need External Data

AI agents, especially those powered by large language models (LLMs), are brilliant but have a short memory—like a goldfish with a PhD. They only know what’s in their immediate context. To do cool stuff like summarizing documents, querying databases, or booking flights, they need access to external data. APIs have long been the bridge for this, but they can be clunky for AI’s dynamic needs. MCP steps in to make things smoother, offering a standardized way for AI to connect with tools and data without the hassle of custom coding for every service.

APIs: The Classic Way to Connect

APIs (Application Programming Interfaces) are the unsung heroes of the digital world. They let apps talk to each other, like ordering takeout through an app without needing to know the chef’s recipe. RESTful APIs, the most common type, use HTTP methods (GET, POST, etc.) to fetch or send data in formats like JSON. For example, an AI using OpenAI’s API can send a prompt and get a response, but each API has its own rules—endpoints, parameters, and quirks. It’s like learning a new dialect for every town you visit.

While APIs are fast and predictable, they’re static. If an API changes, you’re stuck updating your code. For AI agents that need to juggle multiple data sources, this can feel like herding cats.

MCP: The AI-Friendly Newcomer

Enter MCP, Anthropic’s brainchild designed specifically for LLMs and AI agents. Launched in late 2024, MCP is like a USB-C port for AI—plug it in, and it connects to almost anything, from file systems to databases to external APIs. Unlike APIs, MCP lets AI agents discover available tools and data at runtime, no hardcoding required. It’s built on a client-server model using JSON-RPC, with MCP servers exposing capabilities like reading files or sending emails. Early adopters like Block and Apollo are already using it, and even OpenAI has jumped on board (TechCrunch).

MCP vs API: The Showdown

Here’s how MCP and APIs stack up:

| Feature | Traditional API | MCP |
|---|---|---|
| Purpose | General-purpose data access | Designed for LLM/AI agent use |
| Discovery | Static (hardcoded endpoints) | Dynamic discovery at runtime |
| Standardization | Each API is different | All MCP servers follow the same rules |
| Interface | REST, GraphQL, etc. | JSON-RPC with a standardized tool catalog |
| Adaptability | Manual updates needed | AI agents can pick up new tools at runtime |
| Integration Speed | Fast and predictable for known services | More flexible with multiple or changing tools |

MCP’s dynamic discovery is a game-changer, letting AI adapt to new tools without redeploying code. APIs, while reliable, lack this flexibility.

They’re Better Together

MCP and APIs aren’t enemies—they’re like peanut butter and jelly. Many MCP servers wrap existing APIs, making them AI-friendly. For example, an MCP server for GitHub might use GitHub’s REST API under the hood but present it in a standardized way for AI agents. This means you get the structure of APIs with the adaptability of MCP. Pre-built MCP servers for services like Google Maps, Spotify, and Docker make integration a breeze (Anthropic).

When to Use What

- Choose APIs for quick, controlled integrations where you know the endpoints and don’t expect frequent changes.

- Choose MCP when your AI needs to dynamically interact with multiple or changing tools, like in AI-powered IDEs or chatbots.

- Use Both to leverage existing APIs through MCP servers for maximum flexibility.

Tools Like LangGraph Make It Even Easier

Frameworks like LangGraph, a modular tool for building AI workflows, integrate seamlessly with MCP via libraries like langchain-mcp-adapters (LangGraph). This lets developers create AI agents that tap into MCP tools without breaking a sweat, combining the power of structured workflows with MCP’s dynamic capabilities.

Wrapping Up

AI agents need external data to shine, and while APIs offer a solid foundation, MCP gives them superpowers. With dynamic discovery and standardized connections, MCP makes AI integration smoother than a sunny day. Pair it with tools like LangGraph, and you’re ready to build AI that’s as smart as it is adaptable. So, go forth and connect your AI to the world—just maybe keep it away from your inbox!

MCP vs API: Simplifying AI Agent Integration with External Data

Hey there, tech enthusiasts and code wranglers! If you’re reading this, you’re probably either knee-deep in building AI agents or just curious about how these digital helpers can level up their game. Well, buckle up, because today we’re diving into a topic that’s as exciting as it is nerdy: how AI agents can seamlessly integrate with external data and tools. Spoiler alert: it’s not just about APIs anymore. Enter the Model Context Protocol (MCP), Anthropic’s shiny new toy from late 2024, which is like giving your AI agent a universal remote for all things data.

In this post, we’ll compare MCP and traditional APIs, explore how they work, figure out when to use each, and even see how they can team up for maximum AI awesomeness. Written in Blurbify’s signature style—clear, punchy, and just a bit cheeky—this guide will have you saying, “Ah, now I get it!” Let’s dive in.

Why AI Agents Need External Data

AI agents, especially those powered by large language models (LLMs), are brilliant but have a short memory—like a goldfish with a PhD. They only know what’s in their immediate context. To do cool stuff like summarizing documents, querying databases, or booking flights, they need access to external data. APIs have long been the bridge for this, but they can be clunky for AI’s dynamic needs. MCP steps in to make things smoother, offering a standardized way for AI to connect with tools and data without the hassle of custom coding for every service.

The Problem with Context

Imagine you’re an AI agent tasked with helping a developer. Your user asks, “Can you summarize my project files?” or “What’s on my calendar today?” Without access to those files or calendar data, you’re as useful as a paperweight. External data gives AI agents the context they need to provide relevant, actionable responses. The challenge is connecting them to that data efficiently and flexibly.

The Old Way: APIs

For years, APIs have been the go-to for fetching external data. They’re like the trusty menus at your favorite restaurant—you know exactly what you’re getting, but you have to learn the menu for each place you visit. APIs work great for straightforward tasks, but as AI agents get more ambitious, juggling multiple APIs can feel like herding cats.

Part 1: APIs – The Classic Way to Talk to Services

Let’s start with the old guard: APIs (Application Programming Interfaces). If you’ve been in tech for more than five minutes, you’ve probably heard of APIs. They’re like the unsung heroes of the digital world, letting apps talk to each other without needing to know the nitty-gritty details.

What’s an API? (Hint: It’s Not Just Tech Jargon)

Think of an API as a set of rules that lets different software applications communicate. It’s like ordering takeout through a delivery app. You don’t need to know how the restaurant cooks the food or how the delivery person gets to your house; you just need to know what to order and how to pay. That’s what an API does—it abstracts away the complexity and gives you a simple interface to interact with.

In the world of web development, RESTful APIs are the most common. They use standard HTTP methods like GET, POST, PUT, and DELETE to perform operations on resources. For example, to get the current weather, you might send a GET request to a weather API with your location as a parameter, and it returns the weather data in a structured format, like JSON.
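To make the weather example concrete, here is a minimal sketch of what such a request and response could look like. The endpoint URL, the parameter names, and the response fields are all made up for illustration; a real weather API will define its own. The response is a canned string, so nothing is actually sent over the network.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint, used only for illustration.
BASE_URL = "https://api.example-weather.test/v1/current"


def build_weather_url(city: str, units: str = "metric") -> str:
    """Build the URL for a GET request with the location as a query parameter."""
    return f"{BASE_URL}?{urlencode({'city': city, 'units': units})}"


def parse_weather(body: str) -> dict:
    """Pick the fields we care about out of a JSON response body."""
    data = json.loads(body)
    return {"city": data["city"], "temp_c": data["temperature"]}


# A canned response standing in for what the server would send back.
sample_body = '{"city": "Berlin", "temperature": 18.5, "conditions": "cloudy"}'

url = build_weather_url("Berlin")
weather = parse_weather(sample_body)
print(url)
print(weather)
```

Notice that the caller has to know the endpoint, the parameter names, and the response fields up front. That knowledge lives in your code, not in the API.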

APIs and AI: A Match Made in Heaven (Sort Of)

APIs are widely used for connecting all sorts of applications, including LLMs. For instance, if you’re using OpenAI’s API, you can send prompts to their models and get responses back. But here’s the catch: each API is different. You have to learn its specific endpoints, parameters, and authentication methods. It’s like learning a new dialect for every town you visit.

Moreover, APIs are static. Once you’ve integrated an API into your application, if that API changes—say, they deprecate an endpoint or change the response format—you have to update your code accordingly. It’s predictable and structured, but it can also be rigid and time-consuming to maintain, especially if you’re dealing with multiple APIs.
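Here is a small sketch of how that rigidity can bite. Both response formats are invented for this example: the function is written against a hypothetical "version 1" payload and falls over as soon as the provider ships a hypothetical "version 2" with a renamed, nested field.

```python
def read_temp_v1(payload: dict) -> float:
    """Written against version 1 of a hypothetical response format."""
    return payload["temperature"]


v1_response = {"city": "Berlin", "temperature": 18.5}
# Hypothetical version 2: the provider nests the value and renames the key.
v2_response = {"city": "Berlin", "current": {"temp_c": 18.5}}

print(read_temp_v1(v1_response))

try:
    read_temp_v1(v2_response)
    broke = False
except KeyError as err:
    broke = True
    print(f"integration broke, missing key: {err}")
```

Nothing is wrong with the new format; the client code simply was not told about it, so someone has to go in and update it by hand.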

The Pros and Cons of APIs

Here’s a quick rundown of what makes APIs tick:

- Pros:

  - Predictable and structured, making them easy to integrate for known services.

  - Fast to set up if you’re familiar with the API’s documentation.

  - Widely available for almost any service, from weather apps to social media platforms.

- Cons:

  - Rigid: changes to the API require manual updates to your code.

  - Each API has its own quirks, so there’s no one-size-fits-all solution.

  - Not designed with the dynamic, adaptive needs of AI agents in mind.

So, while APIs are great for general-purpose data access, they’re not exactly built for the flexible, ever-changing world of modern AI agents. That’s where MCP comes in.

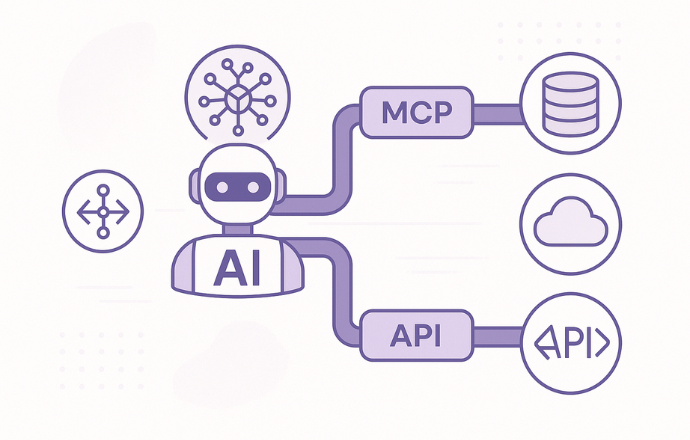

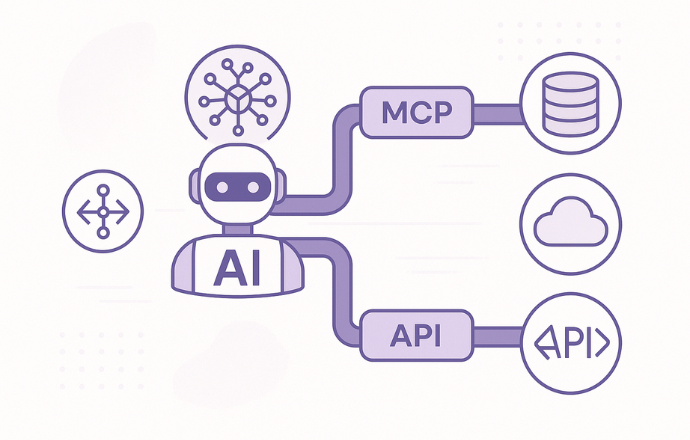

Part 2: Enter MCP – Model Context Protocol

Now let’s talk about the new kid on the block: the Model Context Protocol (MCP). Introduced by Anthropic in late 2024, MCP is specifically designed for LLMs and AI agents. It’s like a universal translator for AI, allowing them to connect to various data sources and tools in a standardized way.

Think of MCP as the USB-C port for LLMs. Just as USB-C allows you to connect different devices with a single type of connector, MCP provides a standard interface for AI models to access external resources. Whether it’s your file system, a database, an email server, or even another API, MCP makes it possible for AI agents to discover and use these resources dynamically.

MCP Architecture: It’s Like a Swiss Army Knife for AI

Let’s break down how MCP works. At its core, MCP follows a client-server architecture:

- MCP Host: This is the application that runs the AI agent, like Claude Desktop or an IDE with AI capabilities.

- MCP Client: This is a component inside the host that maintains a 1:1 connection with a single MCP server and communicates with that server on behalf of the agent. A host can run several clients, one per server.

- MCP Server: These are lightweight servers that expose capabilities to the AI agent. They can be pre-built for common services like Google Drive, Slack, or GitHub, or you can create your own for custom data sources.

- Local Data Sources: These include files, databases, and services on your local machine.

- Remote Services: These are external systems accessed via APIs, which can also be wrapped by MCP servers.

The beauty of MCP is that it allows AI agents to discover what tools and resources are available at runtime. Instead of hardcoding the available tools into the agent, you can have the agent query the MCP server to see what’s available and then use those tools as needed. This dynamic discovery means that if you add a new tool or data source, you don’t need to redeploy the agent; it can adapt on the fly.
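As a rough sketch of that discovery step: MCP messages are JSON-RPC 2.0, and a client asks for the tool catalog with the `tools/list` method. The snippet below builds such a request and feeds it to a toy in-process function that stands in for a server. The `toy_server` function, the two tools, and their schemas are assumptions made for this example, and the initialization handshake a real session starts with is left out.

```python
import json
from typing import Optional


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def toy_server(raw_request: str) -> str:
    """Stand-in for an MCP server; it only knows how to answer tools/list."""
    request = json.loads(raw_request)
    if request["method"] == "tools/list":
        result = {
            "tools": [
                {
                    "name": "get_weather",
                    "description": "Get the current weather for a city",
                    "inputSchema": {
                        "type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"],
                    },
                },
                {
                    "name": "create_event",
                    "description": "Create a calendar event",
                    "inputSchema": {
                        "type": "object",
                        "properties": {"title": {"type": "string"}},
                        "required": ["title"],
                    },
                },
            ]
        }
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})
    error = {"code": -32601, "message": "Method not found"}
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})


response = json.loads(toy_server(make_request(1, "tools/list")))
tool_names = [tool["name"] for tool in response["result"]["tools"]]
print(tool_names)
```

The agent never had those tool names baked in. It learned them from the response, which is the whole point.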

MCP Primitives: The Building Blocks

MCP defines several primitives that make this possible:

- Tools: These are actions that the AI agent can perform, like `get_weather` or `create_event`. Each tool has a name, description, and parameters.

- Resources: These are read-only documents or data that the agent can access, such as database entries or files.

- Prompt Templates: These are predefined prompts that can help guide the agent’s behavior or provide context.

By standardizing these primitives, MCP ensures that AI agents can understand and use tools from different sources without needing custom code for each one.
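One way to picture the three primitives is as plain data records. The sketch below models them with Python dataclasses. The class and field names are simplified for illustration and are not the protocol’s exact wire format, and the example tool, file URI, and prompt are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    """An action the agent can invoke."""
    name: str
    description: str
    input_schema: dict = field(default_factory=dict)  # JSON Schema for the parameters


@dataclass
class Resource:
    """Read-only data the agent can load into its context."""
    uri: str
    name: str
    mime_type: str = "text/plain"


@dataclass
class PromptTemplate:
    """A reusable prompt with named placeholders."""
    name: str
    description: str
    template: str

    def render(self, **values: str) -> str:
        return self.template.format(**values)


get_weather = Tool(
    name="get_weather",
    description="Get the current weather for a city",
    input_schema={
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
)
notes = Resource(uri="file:///notes/todo.txt", name="To-do list")
summarize = PromptTemplate(
    name="summarize",
    description="Summarize a document in a given tone",
    template="Summarize {document} in a {tone} tone.",
)

rendered = summarize.render(document="the to-do list", tone="friendly")
print(rendered)
```

Because every server describes its capabilities in the same shape, an agent can read a tool from one server and a tool from another with the same code.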

Dynamic Discovery: The Game-Changer

One of the most exciting features of MCP is dynamic discovery. Imagine walking into a room full of tools, and instead of having to remember everyone’s name, they all introduce themselves to you. That’s what dynamic discovery does for AI agents. They can query an MCP server to see what tools are available and then use them as needed. No more hardcoding endpoints or redeploying code when a new tool comes along.

For example, if you’re building an AI agent for a developer’s IDE, you can use MCP to let the agent access tools like code repositories, debugging tools, or even version control systems. If you add a new tool later, the agent can automatically discover it without any changes to its code.
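The IDE scenario can be simulated in a few lines. This is a toy, in-memory stand-in, not the MCP SDK: `ToyServer`, `agent_capabilities`, and the two tools are hypothetical names invented for this sketch. What it shows is that the agent-side function stays the same while the list of tools it sees grows.

```python
from typing import Callable, Dict, List, Tuple


class ToyServer:
    """In-memory stand-in for a server that exposes tools."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tuple[str, Callable[..., str]]] = {}

    def register(self, name: str, description: str, handler: Callable[..., str]) -> None:
        self._tools[name] = (description, handler)

    def list_tools(self) -> List[dict]:
        return [{"name": n, "description": d} for n, (d, _) in self._tools.items()]

    def call_tool(self, name: str, **arguments: str) -> str:
        return self._tools[name][1](**arguments)


def agent_capabilities(server: ToyServer) -> List[str]:
    """The agent asks the server what it can do instead of hardcoding a list."""
    return sorted(tool["name"] for tool in server.list_tools())


server = ToyServer()
server.register("search_repo", "Search code in a repository",
                lambda query: f"results for {query}")
before = agent_capabilities(server)

# Later, a new tool is added on the server side. The agent code is untouched.
server.register("run_debugger", "Start a debugging session",
                lambda target: f"debugging {target}")
after = agent_capabilities(server)

print(before)
print(after)
print(server.call_tool("run_debugger", target="main.py"))
```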

Real-World Example: File System Integration

To make this concrete, let’s look at an example from Anthropic’s documentation (Anthropic). Suppose you want your AI agent (say, Claude) to manage files on your desktop. With an MCP Filesystem Server, the agent can:

- Write a poem and save it to your desktop.

- Find work-related files in your downloads folder.

- Move all images to a new folder called “Images.”

The agent calls the relevant tools from the MCP server, asks for user approval before actions like reading or writing files, and gets the job done. The setup is a short entry in claude_desktop_config.json that launches the server with Node.js, which keeps it accessible even for developers new to AI.
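For a sense of scale, the snippet below generates the kind of entry that goes into claude_desktop_config.json. The layout (an `mcpServers` object whose entry launches the filesystem server through `npx`) follows the shape shown in the MCP quickstart as best I recall it, so check the current documentation before copying it. The directory paths are placeholders.

```python
import json

# Placeholder paths; replace them with the directories the server may access.
allowed_dirs = ["/Users/username/Desktop", "/Users/username/Downloads"]

config = {
    "mcpServers": {
        "filesystem": {
            # npx downloads and runs the server package, which is why Node.js is needed.
            "command": "npx",
            "args": ["-y", "@modelcontextprotocol/server-filesystem", *allowed_dirs],
        }
    }
}

config_text = json.dumps(config, indent=2)
print(config_text)
```

The printed JSON is what you would paste into the config file. The directories listed in `args` are the only ones the server is meant to touch.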


Part 3: MCP vs API — The Face-Off

Now that we’ve covered what APIs and MCP are, let’s compare them side by side to see how they stack up, especially in the context of AI agent integration.

| Feature | Traditional API | MCP |
|---|---|---|
| Purpose | General-purpose data access | Designed for LLM/AI agent use |
| Discovery | Static (hardcoded endpoints) | Dynamic discovery at runtime |
| Standardization | Each API is different | All MCP servers follow the same rules |
| Interface | REST, GraphQL, etc. | JSON-RPC with a standardized tool catalog |
| Adaptability | Manual updates needed | AI agents can pick up new tools at runtime |
| Integration Speed | Fast and predictable for known services | More flexible with multiple or changing tools |

Breaking Down the Comparison

- Purpose: APIs are built for general data access, from fetching tweets to checking stock prices. MCP is laser-focused on AI agents, making it easier for LLMs to handle context and tools.

- Discovery: APIs require you to know the endpoints upfront, like memorizing a menu. MCP’s dynamic discovery lets AI agents explore available tools at runtime, like a buffet where the dishes introduce themselves.

- Standardization: Every API is a snowflake, with its own rules. MCP servers follow a universal protocol, so once you know one, you know them all.

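- Interface: APIs use various styles (REST, GraphQL), while MCP uses JSON-RPC 2.0 messages, so every server presents its catalog of tools in the same way.

- Adaptability: When an API changes, you update your code. With MCP, the agent re-reads the tool list at runtime, so new or changed tools show up without redeploying the agent. That makes it a good fit for evolving systems.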

- Integration Speed: APIs are quick to set up for known services, but MCP’s flexibility shines when dealing with multiple or changing tools.

MCP’s dynamic discovery and AI-specific design make it a game-changer for building autonomous, adaptable AI systems. APIs, while reliable, are better suited for static, predictable integrations.
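To show what a uniform interface means in practice, here is a sketch of the other half of the conversation: invoking a tool. In MCP this is a JSON-RPC `tools/call` request that names the tool and passes its arguments, and the result comes back as a list of content items. The dispatcher, the `get_weather` handler, and its canned reply are assumptions for this example; a real server would do the work for real.

```python
import json


def get_weather(city: str) -> str:
    # Canned data; a real handler would look the weather up somewhere.
    return f"Weather in {city}: 18.5 C, cloudy"


HANDLERS = {"get_weather": get_weather}


def handle_tools_call(raw_request: str) -> str:
    """Toy dispatcher for a tools/call request."""
    request = json.loads(raw_request)
    params = request["params"]
    handler = HANDLERS.get(params["name"])
    if handler is None:
        error = {"code": -32602, "message": f"Unknown tool: {params['name']}"}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
    text = handler(**params.get("arguments", {}))
    result = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


request = json.dumps({
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "get_weather", "arguments": {"city": "Berlin"}},
})
reply = json.loads(handle_tools_call(request))
print(reply["result"]["content"][0]["text"])
```

Swap `get_weather` for any other tool and the envelope stays the same. Only the name and the arguments change.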

Part 4: It’s Not a Fight — It’s a Stack

But here’s the thing: MCP and APIs aren’t enemies; they’re like peanut butter and jelly. Many MCP servers are built on top of existing APIs. For example, an MCP server for GitHub might simply wrap GitHub’s REST API, making it accessible to AI agents through the MCP protocol.

So, think of MCP as an abstraction layer that makes traditional APIs more LLM-friendly. It takes the rigidity of APIs and adds a layer of flexibility and discoverability that’s perfect for AI agents. Moreover, MCP can integrate with a wide range of services. There are pre-built MCP servers for popular tools like Google Maps, Spotify, Docker, and even file systems. This means that with MCP, your AI agent can seamlessly interact with these services without you having to write custom integration code for each one.
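The wrapping idea can be sketched as two pieces: a tool description the agent can discover, and a function that turns the tool’s arguments into the REST call behind it. The tool name `list_issues` and the function below are hypothetical and are not taken from any actual GitHub MCP server. The URL follows GitHub’s documented "list repository issues" endpoint, and the request is only built, never sent.

```python
from urllib.parse import urlencode

GITHUB_API = "https://api.github.com"

# What the agent sees: a self-describing tool (hypothetical definition).
LIST_ISSUES_TOOL = {
    "name": "list_issues",
    "description": "List issues in a GitHub repository",
    "inputSchema": {
        "type": "object",
        "properties": {
            "owner": {"type": "string"},
            "repo": {"type": "string"},
            "state": {"type": "string", "enum": ["open", "closed", "all"]},
        },
        "required": ["owner", "repo"],
    },
}


def list_issues_request(owner: str, repo: str, state: str = "open") -> dict:
    """Translate tool arguments into the REST request the wrapper would send."""
    query = urlencode({"state": state})
    return {
        "method": "GET",
        "url": f"{GITHUB_API}/repos/{owner}/{repo}/issues?{query}",
        "headers": {"Accept": "application/vnd.github+json"},
    }


http_request = list_issues_request("octocat", "hello-world")
print(http_request["method"], http_request["url"])
```

The REST details stay hidden inside the wrapper. The agent only ever deals with the tool name, its description, and its input schema.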

Real-World Integrations

Early adopters are already showing what’s possible. Companies like Block and Apollo have integrated MCP into their systems, while development tools like Zed, Replit, and Sourcegraph are using it to enhance their platforms (Anthropic). Even OpenAI has adopted MCP, signaling its potential as an industry standard (TechCrunch).

Part 5: When to Use What?

So, when should you use APIs, and when should you use MCP? Here’s the breakdown:

- Use Traditional APIs when:

  - You need speed and predictability.

  - You have control over the integration and are okay with hardcoding endpoints.

  - The integration is straightforward and unlikely to change frequently.

  - Example: Building a weather app that only needs one API for forecasts.

- Use MCP when:

  - Your AI agent needs flexibility and can adapt to new tools without redeployment.

  - You want dynamic discovery of available tools and resources.

  - You’re building a system where the set of tools might change over time.

  - Example: An AI-powered IDE that needs to access various code repositories and debugging tools.

- Use Both Together for:

  - Maximum flexibility and control.

  - Leveraging existing APIs through MCP servers.

  - Building AI agents that can interact with a wide range of data sources and tools.

  - Example: A chatbot that uses MCP to access multiple services (email, calendar, and APIs) for a seamless user experience.

Hypothetical Scenario

Imagine you’re building an AI assistant for a small business. If it only needs to check inventory via a single API, a traditional API integration is quick and effective. But if the assistant needs to handle inventory, customer emails, and social media posts—and new tools might be added later—MCP’s dynamic discovery and standardized interface make it the better choice. Combine them, and you can use MCP to wrap the inventory API while adding flexibility for future integrations.

Part 6: Tools Like LangGraph Make It Even Easier

Building AI agents can be complex, but tools like LangGraph make it a breeze. LangGraph is a framework for creating modular AI workflows, representing tasks as graphs where nodes are actions or tools and edges define the flow of information. It integrates seamlessly with MCP through libraries like langchain-mcp-adapters (LangGraph).

With LangGraph, you can build AI agents that tap into MCP tools without writing tons of custom code. For example, you could create an agent that uses MCP servers to access Brave Search, GitHub, and local files, all within a single workflow. This modularity and flexibility make LangGraph a perfect companion for MCP, helping developers build powerful, adaptable AI systems.

Example: LangGraph and MCP in Action

A GitHub repository by paulrobello demonstrates how to integrate MCP tools into a LangGraph workflow (MCP LangGraph Tools). The example uses an MCP server for Brave Search, allowing the AI agent to perform web searches dynamically. By setting up a simple graph with agent and tool nodes, developers can create AI systems that adapt to new tools without breaking a sweat.
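If the phrase "a simple graph with agent and tool nodes" feels abstract, the sketch below shows the control flow in plain Python. It deliberately does not use LangGraph’s API or the code from that repository: the node functions, the `run_graph` loop, and the fake `web_search` tool are all invented here to show the shape of the idea. In a real project the tools would come from an MCP client (for example via langchain-mcp-adapters) and the agent node would call an LLM.

```python
from typing import Callable, Dict

State = Dict[str, object]


def agent_node(state: State) -> State:
    """Decide the next step: call a tool first, then answer with its result."""
    if "search_result" not in state:
        call = {"name": "web_search", "arguments": {"query": state["question"]}}
        return {**state, "tool_call": call, "next": "tools"}
    return {**state, "answer": f"Based on search: {state['search_result']}", "next": "end"}


def make_tool_node(tools: Dict[str, Callable[..., str]]) -> Callable[[State], State]:
    """Build a node that runs whichever tool the agent asked for."""
    def tool_node(state: State) -> State:
        call = state["tool_call"]
        result = tools[call["name"]](**call["arguments"])
        return {**state, "search_result": result, "next": "agent"}
    return tool_node


def run_graph(nodes: Dict[str, Callable[[State], State]], state: State,
              start: str = "agent", max_steps: int = 10) -> State:
    """Follow the 'next' edges until a node routes to 'end'."""
    current = start
    for _ in range(max_steps):
        state = nodes[current](state)
        current = state["next"]
        if current == "end":
            return state
    raise RuntimeError("graph did not finish within max_steps")


# Fake tool standing in for something like a search MCP server.
tools = {"web_search": lambda query: f"top hit for '{query}'"}
nodes = {"agent": agent_node, "tools": make_tool_node(tools)}

final_state = run_graph(nodes, {"question": "what is MCP"})
print(final_state["answer"])
```

The tool node looks tools up by name in whatever dictionary it is handed, so filling that dictionary from a server’s tool list at startup is what would let the workflow pick up new tools without edits to the graph.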


Conclusion

AI agents are only as good as the data and tools they can access. Traditional APIs provide a solid foundation, like the trusty sidekick you’ve always had—reliable but a bit predictable. MCP, on the other hand, is like the new superhero in town, ready to save the day with its dynamic powers. By offering standardized, AI-friendly connections and dynamic discovery, MCP makes it easier to build agents that are as adaptable as they are smart.

Tools like LangGraph take this to the next level, providing frameworks to create complex AI workflows that leverage MCP’s capabilities. Whether you’re a developer building the next big AI assistant or a tech enthusiast curious about the future, understanding MCP and APIs is key to unlocking AI’s full potential.

So, go forth and connect your AI to the world—just maybe keep it away from your inbox, okay? With MCP, APIs, and a sprinkle of creativity, you’re ready to build AI agents that are as connected as your social media feed and as flexible as a yoga instructor. Happy coding!

FAQ

- What is the main difference between MCP and traditional APIs?

Traditional APIs are general-purpose interfaces for data access, requiring static, hardcoded integrations. MCP, designed for AI agents, offers dynamic discovery and standardized interaction with tools and data sources, making it more flexible for LLMs.

- How does dynamic discovery work in MCP?

Dynamic discovery lets AI agents query available tools and resources at runtime. Instead of being pre-programmed with specific endpoints, agents can ask what’s available and use those tools, adapting to changes without redeployment.

- Can I use MCP with any LLM, or is it specific to Anthropic’s models?

MCP is an open protocol, usable with any LLM whose host application supports it. While Anthropic introduced it, providers like OpenAI have adopted it, which moves it toward a cross-vendor standard for AI integration (TechCrunch).

- What are some real-world applications of MCP?

MCP powers AI-powered IDEs accessing code repositories, chatbots pulling data from business tools, and custom workflows integrating with databases, file systems, and APIs. Companies like Block and Apollo are already using it (Anthropic).

- How does LangGraph integrate with MCP?

LangGraph builds modular AI workflows and integrates with MCP via libraries like langchain-mcp-adapters. This lets agents use MCP tools seamlessly, enhancing their ability to handle diverse data sources (LangGraph).

- Is MCP secure, especially since AI agents can perform actions?

MCP is designed with user control in mind. For example, agents seek user approval before actions like reading or writing files. MCP servers can also restrict access based on permissions, which helps keep interactions safe.

- Do I need to be an expert in AI to use MCP?

Not at all! MCP is designed for developers, with pre-built servers and clear documentation. Even those new to AI can start integrating MCP into projects with minimal hassle (MCP Documentation).

Sources We Trust:

A few solid reads we leaned on while writing this piece.

- Anthropic’s Model Context Protocol Announcement

- LangGraph MCP Integration Documentation

- OpenAI Adopts Anthropic’s MCP Standard

- Official MCP Documentation and Specification

- LangChain MCP Adapters Changelog

- GitHub: LangGraph MCP Solution Template

- GitHub: LangChain MCP Adapters Library

- GitHub: MCP Tools LangGraph Integration Example

- GitHub: LangGraph MCP Agents with Streamlit

- Reddit: MCP Server Tools LangGraph Integration

- LangChain Blog: MCP Fad or Fixture Debate

- Medium: Building Universal Assistant with LangGraph and MCP

- Medium: Using LangChain with Model Context Protocol

- Forbes: Anthropic’s MCP as AI Integration Advancement

- WillowTree: Is MCP Right for Your AI Development

- Norah Sakal: MCP vs API Explained

- VentureBeat: Anthropic’s MCP for AI Data Integration

- InfoQ: Anthropic Publishes MCP Specification

- Reddit: Anthropic’s MCP Significance Discussion

- YouTube: Building Agents with MCP Workshop